Are you evaluating AI infrastructure platforms to accelerate AI adoption in your organization, or looking for alternatives to GPU-based systems for AI workloads? Many enterprises want to advance AI development and adoption but face significant challenges: complex and costly infrastructure, GPU supply shortages and rising prices, lengthy deployment cycles, integration hurdles, and limited in-house AI/ML expertise. This drives the need for a solution that is fast, scalable, efficient, and truly enterprise-ready.

In this article, we introduce SambaNova Systems, a full-stack AI platform designed for enterprises. It combines custom-built hardware (Reconfigurable Dataflow Units, or SambaNova RDUs), a managed service model (Dataflow-as-a-Service™), and access to pre-trained foundation models. We’ll explore how SambaNova simplifies AI deployment so you can assess whether it’s the right fit for your organization and understand the potential business benefits it can deliver.

TL;DR: How SambaNova AI can optimize your business to maximize efficiency

SambaNova Systems is an AI hardware and software company offering an integrated platform, Dataflow-as-a-Service, that simplifies and accelerates AI adoption.

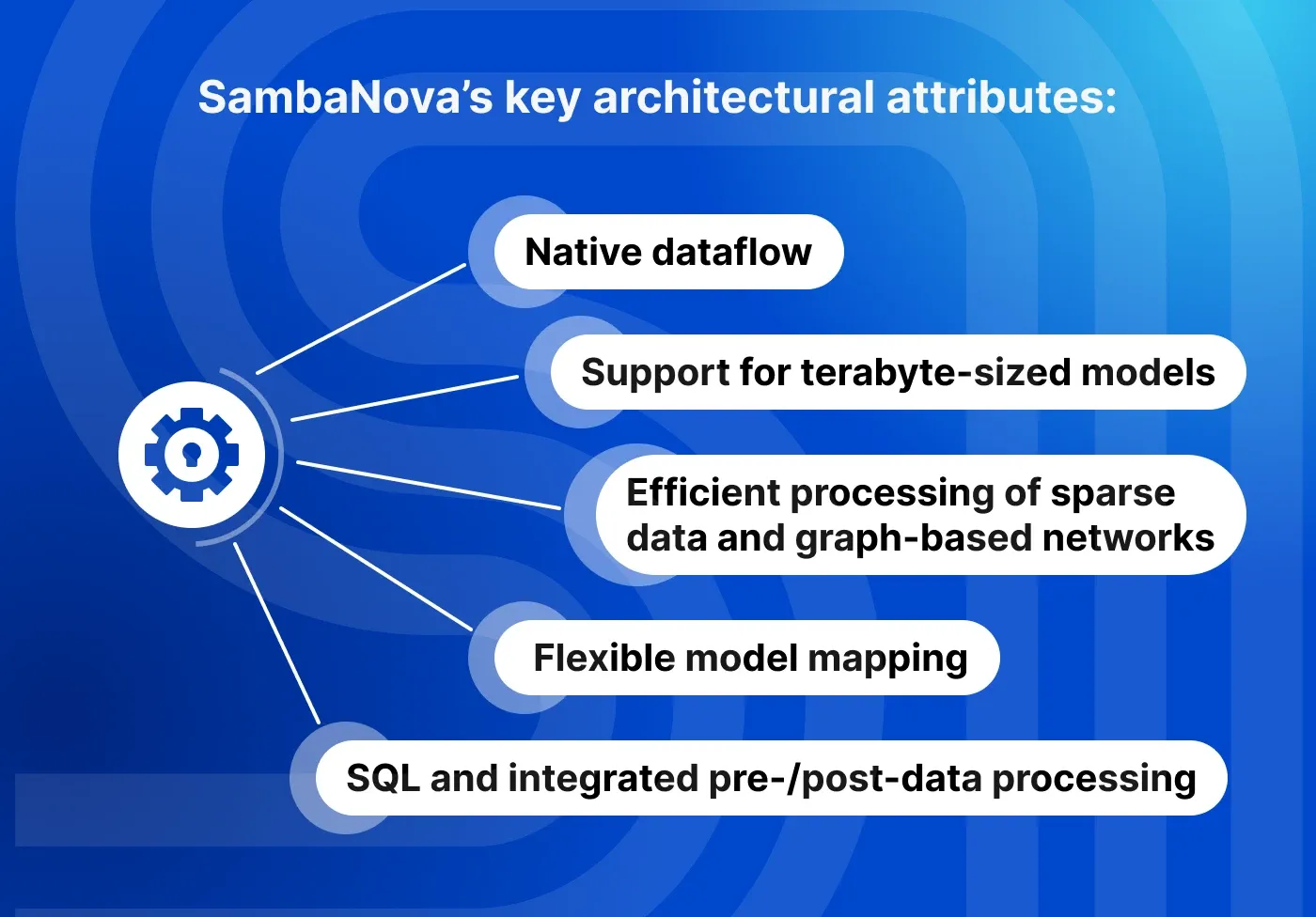

Key attributes for a next-generation architecture include native dataflow, support for terabyte-sized models, efficient processing of sparse data and graph-based networks, flexible model mapping, and integration of SQL and other pre- and post-processing steps.

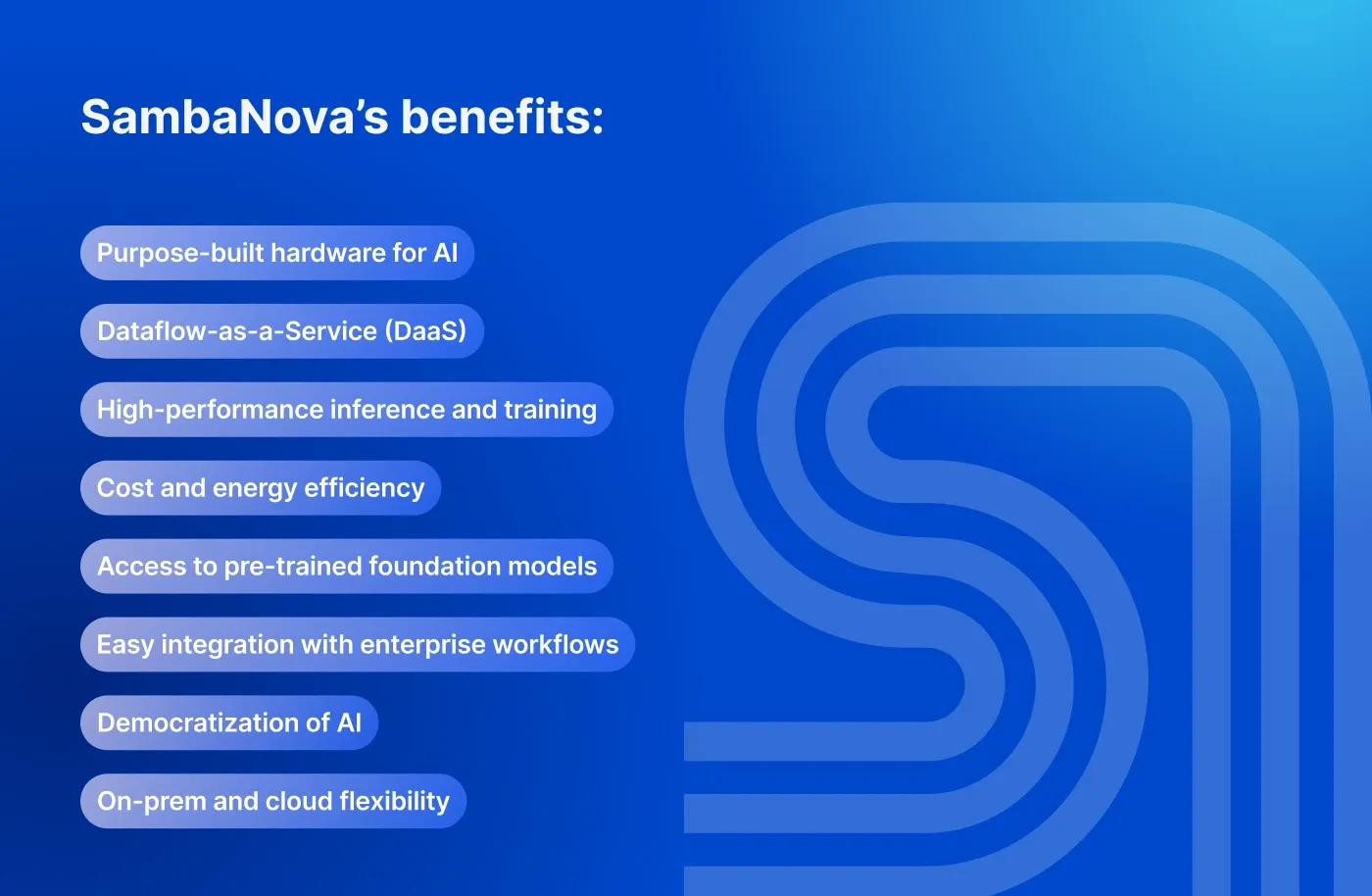

SambaNova’s benefits include purpose-built AI hardware, Dataflow-as-a-Service (DaaS), high-performance inference and training, cost and energy efficiency, access to pre-trained foundation models, easy integration with enterprise workflows, democratization of AI, and on-premises and cloud flexibility.

What is SambaNova?

SambaNova is a pioneering AI hardware and software company offering an integrated platform, Dataflow-as-a-Service, that dramatically simplifies and accelerates AI adoption, development, training, and deployment.

Key attributes of the SambaNova architecture

SambaNova’s architecture is defined by the following main attributes:

Native dataflow. Machine learning frameworks and domain-specific languages (DSLs) can leverage parallel patterns that optimize computation and memory access for both dense and sparse data. This is designed to maximize platform utilization while supporting models written in any framework.

Support for terabyte-sized models. Larger models can deliver greater accuracy and richer functionality. SambaNova supports training and inference for extremely large models, thanks to its large on-chip and off-chip memory capacity.

Efficient processing of sparse data and graph-based networks. Many AI applications rely on sparse data structures, which traditional architectures often process inefficiently. SambaNova’s approach is designed to minimize unnecessary computation on such data.

Flexible model mapping. Scaling workloads across infrastructure typically requires complex programming. SambaNova automates this process, eliminating the need for developers to be system architecture experts.

SQL and integrated pre- and post-processing. By unifying deep learning and ETL (extract, transform, load) tasks on a single platform, SambaNova reduces data pipeline complexity, cost, and latency.
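To make the sparse-data point concrete, here is a minimal sketch of the general technique of skipping zeros: a matrix-vector product over a matrix stored in compressed sparse row (CSR) form. It is a generic illustration, not SambaNova’s implementation, and the function names `to_csr` and `csr_matvec` are hypothetical. The number of multiplications scales with the number of nonzeros rather than with the full matrix size.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def to_csr(dense: Matrix) -> Tuple[List[float], List[int], List[int]]:
    """Convert a dense row-major matrix to CSR (values, column indices, row pointers)."""
    values: List[float] = []
    col_idx: List[int] = []
    row_ptr: List[int] = [0]
    for row in dense:
        for j, v in enumerate(row):
            if v != 0.0:  # store only nonzero entries
                values.append(v)
                col_idx.append(j)
        row_ptr.append(len(values))  # marks where this row's nonzeros end
    return values, col_idx, row_ptr


def csr_matvec(values, col_idx, row_ptr, x) -> Tuple[List[float], int]:
    """Compute y = A @ x touching only stored nonzeros; also return the multiply count."""
    y: List[float] = []
    mults = 0
    for i in range(len(row_ptr) - 1):
        acc = 0.0
        for k in range(row_ptr[i], row_ptr[i + 1]):
            acc += values[k] * x[col_idx[k]]
            mults += 1
        y.append(acc)
    return y, mults


if __name__ == "__main__":
    A = [
        [4.0, 0.0, 0.0, 0.0],
        [0.0, 0.0, 2.0, 0.0],
        [0.0, 0.0, 0.0, 0.0],
        [1.0, 0.0, 0.0, 3.0],
    ]
    x = [1.0, 2.0, 3.0, 4.0]
    y, mults = csr_matvec(*to_csr(A), x)
    dense_mults = len(A) * len(A[0])
    # prints: [4.0, 6.0, 0.0, 13.0] 4 multiplies vs 16 dense
    print(y, f"{mults} multiplies vs {dense_mults} dense")
```

Here only 4 of 16 multiplications are performed; the savings grow as matrices become sparser.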

SambaNova AI benefits

SambaNova AI offers the following benefits:

Purpose-built hardware for AI

Unlike general-purpose GPUs or CPUs, SambaNova uses a tailor-made processor, the Reconfigurable Dataflow Unit (RDU), built specifically to run AI workloads. The result is higher speed, lower latency, and more efficient processing of machine learning and deep learning workloads.

Dataflow-as-a-Service (DaaS)

SambaNova delivers its machine learning platform as a managed service. Companies do not have to invest in or build their own infrastructure; they get ready-to-use AI capabilities in the cloud or on-premises.

High-performance inference and training

SambaNova supports both training and inference at enterprise scale. On the same platform, you can train large models and deploy them for real-time use, with better speed and cost efficiency than GPU-based systems.

Cost and energy efficiency

By using less power than traditional GPU-based systems and delivering more performance per dollar, SambaNova reduces total cost of ownership (TCO) and improves the sustainability of large AI projects.

Access to pre-trained foundation models

SambaNova offers pre-trained LLMs that are ready to fine-tune or use out of the box. There is no need to train models from scratch, which saves time and compute resources.

Easy integration with enterprise workflows

The platform is enterprise-ready and supports use cases such as document processing, recommendation systems, fraud detection, and many more, enabling fast deployment in real-world business environments.

Democratization of AI

SambaNova makes powerful AI accessible to organizations without extensive in-house AI expertise. Mid-sized businesses and government agencies can now use advanced AI capabilities that were once available mainly to the largest technology companies.

On-prem and cloud flexibility

SambaNova solutions can be deployed on-premises or in the cloud, depending on how sensitive an organization’s data is (for example, in finance or healthcare). This gives organizations the greatest level of control, compliance, and flexibility.

SambaNova vs traditional solutions

While NVIDIA dominates the market with GPU-based systems, SambaNova Systems offers a fundamentally different, more scalable approach tailored specifically to enterprise AI needs. Traditional AI systems are built on general-purpose GPUs, which were not originally designed for large-scale generative AI. These systems often face constraints in memory bandwidth, latency, and compute efficiency when performing real-time inference.

SambaNova takes a different architectural approach with its SN40L AI chip, a custom processor designed for high-performance AI inference. Unlike traditional architectures, the SN40L features a dataflow design and a three-tier memory system that enables it to run large models such as Llama 3.1 405B at full precision, achieving up to 132 tokens per second with low latency.
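Because throughput is quoted in tokens per second, it converts directly into response time. The sketch below is simple arithmetic under stated assumptions: a steady decode rate equal to the quoted peak of 132 tokens per second, with time-to-first-token, prompt processing, and network overhead ignored, so real end-to-end times will be longer. The function name `generation_time_s` is hypothetical.

```python
def generation_time_s(output_tokens: int, tokens_per_second: float) -> float:
    """Seconds to stream `output_tokens` at a constant decode rate.

    Assumes a steady rate and ignores time-to-first-token and network overhead.
    """
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return output_tokens / tokens_per_second


if __name__ == "__main__":
    rate = 132.0  # peak figure quoted for Llama 3.1 405B on SN40L
    for n in (100, 500, 1000):
        print(f"{n:>5} tokens -> {generation_time_s(n, rate):.1f} s")
```

At that rate, a 100-token reply streams in about 0.8 seconds and a 1,000-token answer in about 7.6 seconds, which is what makes interactive use of a 405B-parameter model practical.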

In contrast to conventional systems, which often require extensive model tuning or trade accuracy for performance gains, SambaNova’s end-to-end platform runs large models out of the box. Models can be accessed through an API, without the complexity of managing infrastructure or hand-optimizing deployments.
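As a rough sketch of what API access can look like: SambaNova Cloud advertises an OpenAI-compatible chat completions interface. The base URL, the model ID, and the `SAMBANOVA_API_KEY` environment variable name below are assumptions for illustration; check SambaNova’s current API documentation before relying on them. The sketch uses only the Python standard library and builds the request without sending it.

```python
import json
import os
import urllib.request

# Assumed values for illustration only; verify against SambaNova's API docs.
BASE_URL = "https://api.sambanova.ai/v1"    # assumption: OpenAI-compatible endpoint
MODEL_ID = "Meta-Llama-3.1-405B-Instruct"   # assumption: available model IDs may differ


def build_chat_request(prompt: str, api_key: str, max_tokens: int = 256) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions POST request."""
    body = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical environment variable name; falls back to a placeholder.
    key = os.environ.get("SAMBANOVA_API_KEY", "<your-key>")
    req = build_chat_request("Summarize our Q3 risk report in three bullets.", key)
    print(req.get_method(), req.full_url)
    # To actually send it (requires a valid key and network access):
    # with urllib.request.urlopen(req) as resp:
    #     reply = json.load(resp)
    #     print(reply["choices"][0]["message"]["content"])
```

Separating request construction from sending keeps the example runnable offline; the commented-out parsing lines assume the OpenAI-style `choices[0].message.content` response shape.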

SambaNova use cases for enterprise organizations

SambaNova’s full-stack platform is having a transformative impact across industries. Let’s look at some of the use cases where companies are using SambaNova to gain substantial benefits.

LLM inference at scale for customer-facing applications

SambaNova’s architecture allows enterprises to deploy large language models (LLMs) such as Llama or DeepSeek at scale in applications including chatbots, digital assistants, and content creation tools, delivering low-latency performance across millions of daily queries without relying on GPU-based infrastructure.

Fine-tuned models for domain-specific AI

Companies in regulated industries and other specialized fields use SambaNova to fine-tune foundation models on their own datasets, building precise NLP, vision, and multimodal capabilities for analyzing medical documents, legal documents, or scientific texts.

AI-driven process and workflow automation

Organizations use SambaNova to speed up back-office work by automating invoice processing, document review, and other repetitive operations with efficient NLP and vision models, reducing operational friction and turnaround time.

Enhanced enterprise analytics and search

Companies modernize their search systems by adding AI-powered semantic search, smart document classification, and natural-language querying, improving information retrieval, discovery, and internal support automation.

Conversational AI for multilingual customer support

Companies use SambaNova to power conversational agents and voice assistants with accurate speech-to-text, intent detection, and multilingual natural-language understanding, providing fast, accurate responses that keep service quality high during peak demand.

Accelerated scientific research and drug discovery

Biotech and pharmaceutical companies integrate SambaNova into generative chemistry, genomics analysis, and large-scale simulation workflows, using on-premises deployment to meet strict data governance and regulatory requirements.

Real-time financial risk scoring and fraud detection

Banking and fintech organizations can scale their SambaNova-based infrastructure to run advanced fraud detection and anti-money laundering (AML) models in real time as transaction volumes grow, ensuring continuous risk oversight.

Main reasons enterprises choose SambaNova

Here are four main reasons forward-looking organizations are adopting SambaNova to future-proof their AI strategy:

1. Full-stack platform

SambaNova offers a complete AI stack, including AI-optimized silicon, software, and models, in a single unified package, avoiding the fragmentation of GPU-based systems.

Why it matters: Deployment is faster, latency is reduced, and control is improved, all in one system.

2. Samba-1: enterprise-grade, trillion-parameter model

Samba-1 combines 90+ expert models into a single, scalable model with built-in privacy, access control, and task-specific tuning.

Why it matters: You get best-in-class GenAI performance tailored to your data and business, without the cost of retraining.

3. High model density, smaller footprint

SambaNova supports hundreds of models and users simultaneously on the same system, unlike GPU stacks, which need more hardware per model.

Why it matters: Lower infrastructure costs, better utilization, and lower TCO.

4. AI-as-a-Service for quick deployment

SambaNova AIaaS lets you deploy sophisticated models on-premises or in your own cloud without building an in-house machine learning team or managing GPU provisioning.

Why it matters: Fast time to value, easier compliance, and reduced staffing requirements.

The bottom line

As AI adoption accelerates across industries, enterprise leaders face increasing pressure to deploy scalable, cost-effective, and secure infrastructure beyond the limits of legacy GPU-based systems. SambaNova offers an alternative: custom-designed hardware, pre-optimized foundation models, and a full-stack, enterprise-ready architecture that simplifies enterprise-scale deployments.

Whether you are deploying LLMs at scale, incorporating AI into your business processes, or future-proofing your infrastructure for the next generation of AI models, SambaNova offers the performance, flexibility, and control needed to bring AI workloads to production faster.

Contact us if you are exploring AI development or integration, modernizing your infrastructure, or planning an artificial intelligence (AI) project. Our experts will help you evaluate software solutions, mitigate implementation risks, and accelerate your path to AI success.

Frequently asked questions

Can SambaNova be integrated with cloud computing solutions and hybrid infrastructure?

Yes. The SambaNova platform is scalable and can be deployed on-premises, in the cloud, or across hybrid environments. This lets businesses expand their AI and machine learning capabilities while keeping sensitive data under their control and optimizing for cost and performance.

How quickly can SambaNova’s solution be deployed in a standard data center?

With SambaManaged, its flagship product, SambaNova helps organizations go live with a fully managed AI inference solution in as little as 90 days, compared with a traditional timeline of 18 months or more.

How does inference speed affect real-time applications?

Faster inference enables real-time decision-making in applications such as chatbots, fraud detection, and autonomous systems, which enhances performance and user experience.

Does SambaNova support open-source or proprietary AI models?

Both. SambaNova lets companies use open-source AI models or build their own, so they do not become dependent on a single vendor and can stay flexible as technology changes. Proprietary models can also be used in custom deployments.

How does SambaNova achieve computing efficiency for deep learning workloads?

SambaNova optimizes the performance of deep learning computations by utilizing its dataflow architecture and specialized chips (RDUs). SambaNova reduces data movement and maximizes parallel execution, thereby increasing computing efficiency compared to traditional GPU-based approaches.
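The answer above credits part of the efficiency gain to reduced data movement. The toy accounting model below is our own simplified illustration of that principle, not a model of the RDU: in kernel-by-kernel execution every intermediate result round-trips through off-chip memory, whereas in a pipelined, dataflow-style execution each value streams through all operations while staying on-chip. It assumes 2-byte (16-bit) elements and counts only off-chip reads and writes; the function names are hypothetical. GPU software stacks also reduce this traffic with kernel fusion, so treat the numbers as an illustration of the idea rather than a benchmark.

```python
from typing import Callable, List, Sequence

Op = Callable[[float], float]


def run_op_by_op(x: Sequence[float], ops: List[Op], bytes_per_elem: int = 2):
    """Kernel-by-kernel model: each op reads its input from off-chip memory and
    writes its output back, so traffic grows with the number of ops."""
    traffic = 0
    buf = list(x)
    for op in ops:
        traffic += len(buf) * bytes_per_elem  # read input from memory
        buf = [op(v) for v in buf]
        traffic += len(buf) * bytes_per_elem  # write intermediate back to memory
    return buf, traffic


def run_pipelined(x: Sequence[float], ops: List[Op], bytes_per_elem: int = 2):
    """Dataflow-style model: each element flows through every op on-chip, so only
    the initial read and the final write count as off-chip traffic."""
    out = []
    for v in x:
        for op in ops:
            v = op(v)
        out.append(v)
    traffic = 2 * len(x) * bytes_per_elem  # one read plus one write per element
    return out, traffic


if __name__ == "__main__":
    x = [float(i) for i in range(1000)]
    ops = [lambda v: v * 2.0, lambda v: v + 1.0, lambda v: max(v, 0.0)]
    a, t_op = run_op_by_op(x, ops)
    b, t_pipe = run_pipelined(x, ops)
    assert a == b  # same results, different memory traffic
    # prints: op-by-op: 12000 bytes, pipelined: 4000 bytes
    print(f"op-by-op: {t_op} bytes, pipelined: {t_pipe} bytes")
```

With three chained operations, the op-by-op model moves three times as many bytes; the ratio grows with the length of the chain.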
