When you use large language models (LLMs) such as GPT-4 for business tasks, you run into several problems. Their training data is often outdated and doesn’t cover domain-specific topics in depth. Plus, LLMs may deliver inaccurate or generic responses, or hallucinate. How can you deal with these problems and adapt the model to your specific tasks?

Techniques such as retrieval-augmented generation (RAG), fine-tuning, and prompt engineering are designed to help with exactly that. In this article, we dive deep into the specifics of these three approaches and explain how you can use them for your domain.

TL;DR: RAG vs fine-tuning vs prompt engineering for optimizing AI models

RAG supplies an LLM with external data at query time to improve its answers. Fine-tuning is further training of a pre-trained model on task-specific data. Prompt engineering is the practice of wording the prompt to get the desired output.

The three approaches differ in terms of implementation complexity, budget and resources needed, quality of the outcomes, data requirements, and update complexity.

RAG is best if you need up-to-date responses within your domain. Fine-tuning is a must if you’re looking to modify a model’s behavior. Prompt engineering is a good fit for simple automation, data analysis, and streamlining coding.

RAG, fine-tuning, and prompt engineering: the basics

When you ask ChatGPT about a topic, for example, what it knows about John Doe, it gives you a generic answer based on the model’s training data.

But what if you need to create a chatbot for your website that should deliver specific information about John Doe as a medical professional? In this case, you need to adjust the LLM to your requirements using one of three AI customization techniques (or a combination of them) – RAG, fine-tuning, and prompt engineering. Let’s explore what they are all about.

What is Retrieval-Augmented Generation?

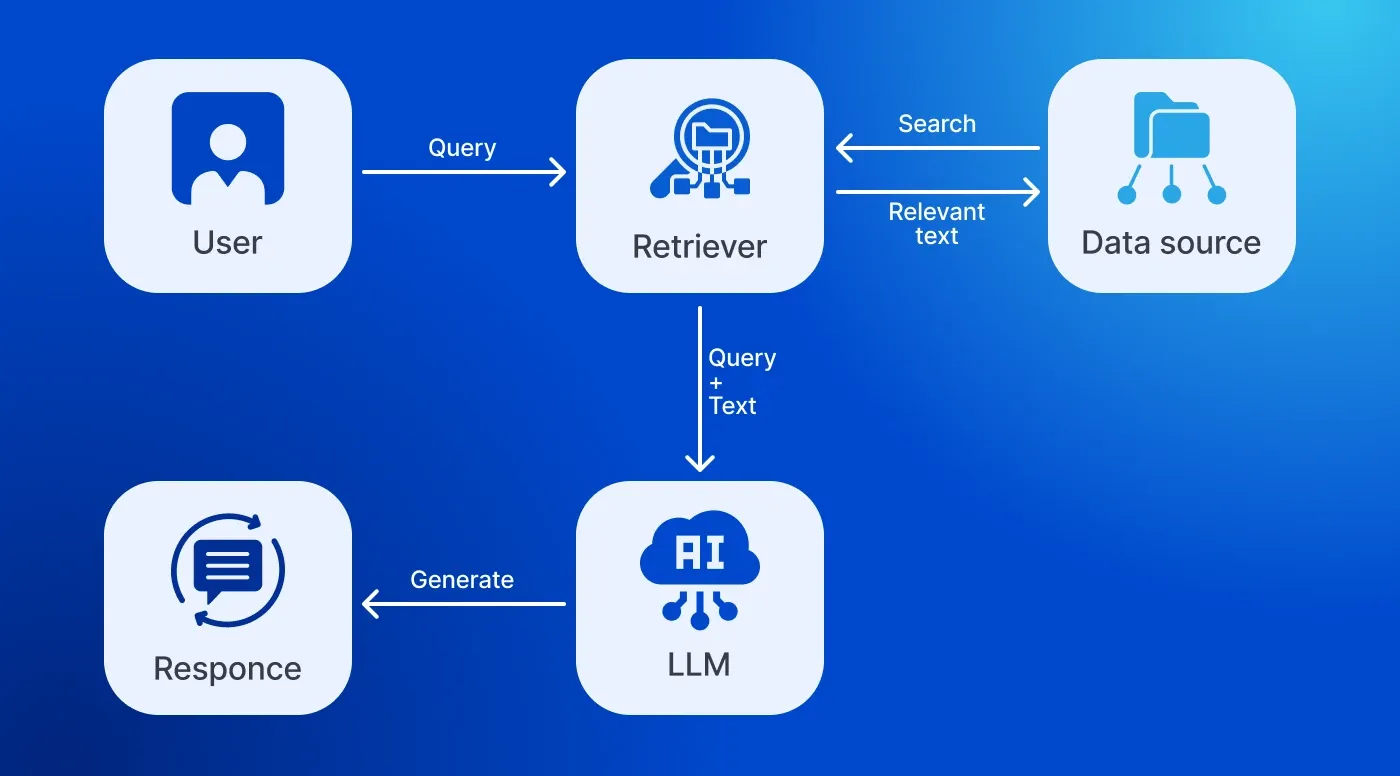

Retrieval-Augmented Generation (RAG) is the process of supplying an LLM with external sources of information, such as databases and documentation, so that it delivers more accurate, domain-specific answers.

How does RAG work? You start by collecting data and breaking it down into smaller chunks, each dedicated to a specific topic. Next, these chunks are converted into vector representations (embeddings). Once a user query gets into the system, it’s also transformed into a vector so that its meaning and context can be compared with the database content. The most similar chunks are treated as the most relevant context for the query, so the RAG system retrieves the corresponding text and sends it to the LLM together with the query to generate the answer for the user.
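
The retrieval flow described above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the `embed` function is a toy bag-of-words stand-in for a real embedding model, the sample chunks about John Doe are made up, and the function names are our own assumptions.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def retrieve(query: str, chunks: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k chunks most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(chunks,
                    key=lambda c: cosine_similarity(query_vec, embed(c)),
                    reverse=True)
    return ranked[:top_k]

def build_prompt(query: str, context_chunks: list[str]) -> str:
    """Combine the retrieved chunks and the user query into one LLM prompt."""
    context = "\n".join(f"- {chunk}" for chunk in context_chunks)
    return ("Answer using only the context below.\n\n"
            f"Context:\n{context}\n\nQuestion: {query}")

# Made-up sample data for illustration.
chunks = [
    "Dr. John Doe is a cardiologist who sees patients on Mondays and Thursdays.",
    "The clinic is located at 12 Example Street and has free parking.",
    "Appointments can be cancelled up to 24 hours in advance.",
]
query = "When does John Doe see patients?"
top = retrieve(query, chunks, top_k=1)
prompt = build_prompt(query, top)
print(prompt)
```

In a real deployment, the chunk vectors would typically be computed once and stored in a vector database rather than re-embedded on every query as this sketch does.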

Learn more about how vectors are compared in our article about vector similarity search.

What is fine-tuning?

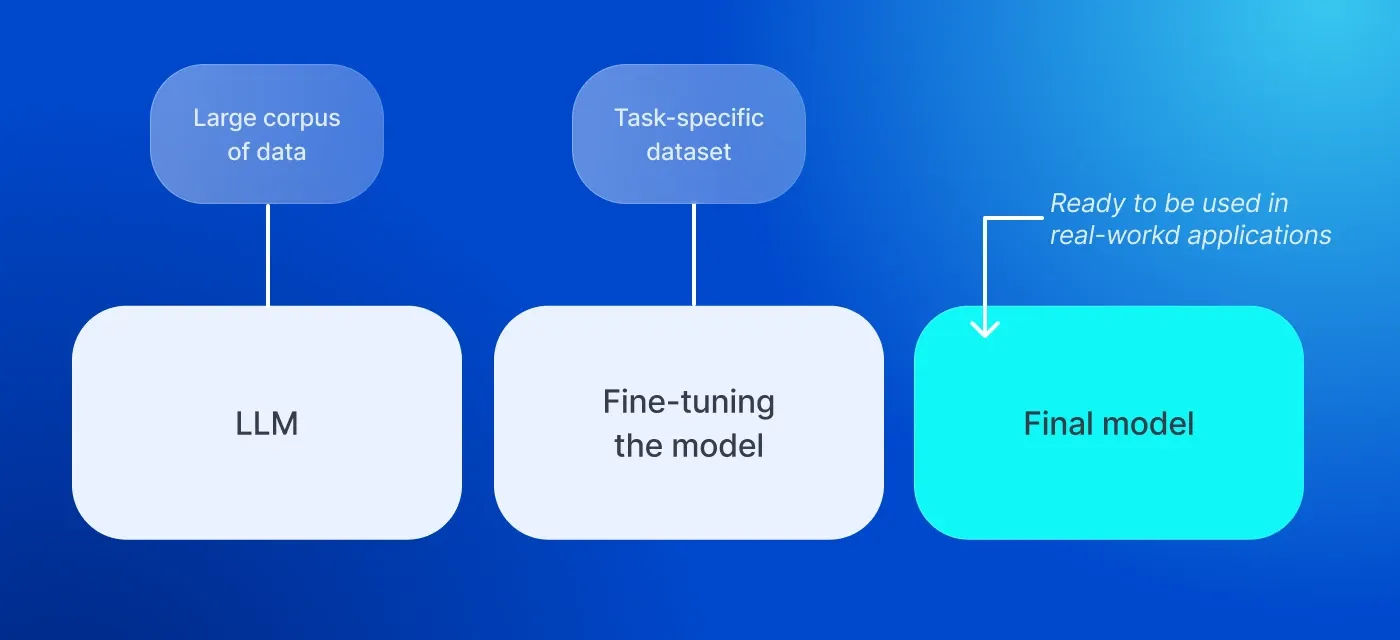

Fine-tuning is the process of further training a pre-trained model, such as GPT (Generative Pre-trained Transformer), so that it delivers results specific to your domain.

How does fine-tuning work? First, you need to select a pre-trained model relevant to your task. Next, load the training data, for example, from the Hugging Face Datasets library, for more focused training. The fine-tuning process itself involves feeding the model the new inputs, comparing the model's responses with the desired ones, and reducing the errors through iterative weight adjustments.
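
The loop of "feed inputs, compare with desired outputs, adjust weights" can be illustrated with a deliberately tiny example. The sketch below is not an LLM: it assumes a one-feature linear model whose starting weights stand in for a pre-trained model, and it uses plain gradient descent on mean squared error. Real fine-tuning relies on a training framework and far larger models, but the iteration has the same shape.

```python
def predict(w, b, x):
    """Toy model: a single linear unit."""
    return w * x + b

def mean_squared_error(w, b, data):
    """Average squared gap between model outputs and desired outputs."""
    return sum((predict(w, b, x) - target) ** 2 for x, target in data) / len(data)

def fine_tune(w, b, data, lr=0.05, epochs=2000):
    """Repeatedly compare predictions with targets and nudge the weights."""
    n = len(data)
    for _ in range(epochs):
        grad_w = 0.0
        grad_b = 0.0
        for x, target in data:
            error = predict(w, b, x) - target   # compare with the desired output
            grad_w += 2 * error * x / n         # gradient of MSE w.r.t. w
            grad_b += 2 * error / n             # gradient of MSE w.r.t. b
        w -= lr * grad_w                        # weight adjustment
        b -= lr * grad_b
    return w, b

# "Pre-trained" weights (assumed starting point) and new domain data (y = 2x + 1).
pretrained_w, pretrained_b = 1.0, 0.0
domain_data = [(1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

loss_before = mean_squared_error(pretrained_w, pretrained_b, domain_data)
tuned_w, tuned_b = fine_tune(pretrained_w, pretrained_b, domain_data)
loss_after = mean_squared_error(tuned_w, tuned_b, domain_data)
print(f"loss before: {loss_before:.4f}, loss after: {loss_after:.6f}")
```

The key point is that training starts from existing weights rather than from scratch, which is what distinguishes fine-tuning from training a new model.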

What is prompt engineering?

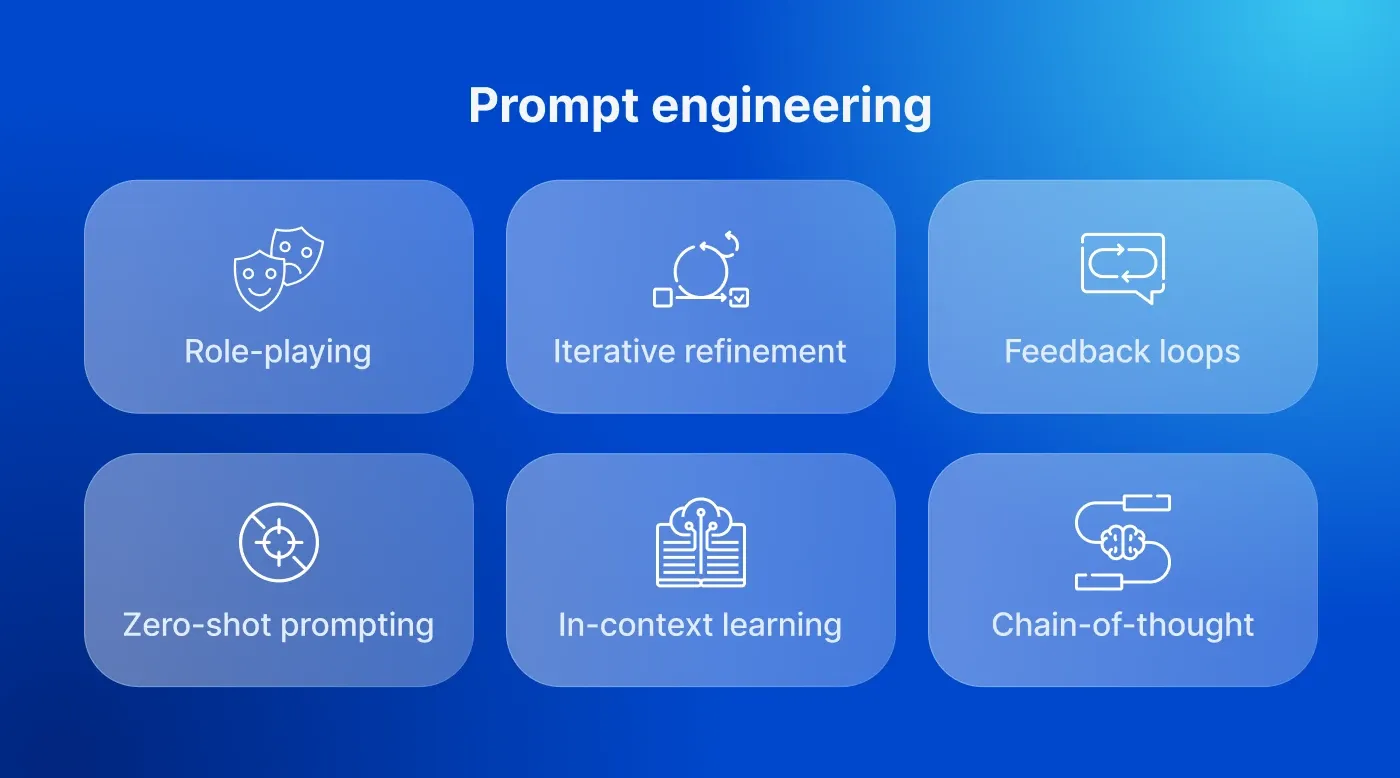

Prompt engineering is the process of getting the desired output from the LLM by precise prompting.

How does prompt engineering work? The most basic prompt engineering techniques, familiar even to non-technical users, are:

Role-playing

Iterative refinement

Feedback loops

More advanced techniques, which require a deeper understanding of how LLMs work, are as follows:

Zero-shot prompting (asking the model to perform a task without giving it any examples in the prompt)

In-context learning (showing a few examples of desired outputs in the prompt, also known as few-shot prompting)

Chain-of-thought (guiding the model through intermediate reasoning steps before it gives the final answer).
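
To show how the three advanced techniques differ in practice, here is a small sketch that builds each kind of prompt as a plain string. The function names, the `Text:`/`Label:` layout, and the sentiment task are illustrative assumptions; the resulting strings could be sent to any LLM API.

```python
def zero_shot_prompt(task, text):
    """Zero-shot: task description only, no examples."""
    return f"{task}\n\nText: {text}\nLabel:"

def few_shot_prompt(task, examples, text):
    """In-context learning: show a few input/output pairs before the real input."""
    shots = "\n\n".join(f"Text: {x}\nLabel: {y}" for x, y in examples)
    return f"{task}\n\n{shots}\n\nText: {text}\nLabel:"

def chain_of_thought_prompt(task, text):
    """Chain-of-thought: ask for intermediate reasoning before the final answer."""
    return (f"{task}\n\nText: {text}\n"
            "Reason step by step, then give the final result on a new line "
            "starting with 'Label:'.")

task = "Classify the sentiment of the text as positive or negative."
examples = [
    ("The support team solved my issue quickly.", "positive"),
    ("The app crashes every time I log in.", "negative"),
]
text = "Delivery was late and the box was damaged."

print(zero_shot_prompt(task, text))
print("---")
print(few_shot_prompt(task, examples, text))
print("---")
print(chain_of_thought_prompt(task, text))
```

Keeping prompts in small builder functions like these also makes it easier to update the examples later without touching the rest of the application.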

RAG vs fine-tuning vs prompt engineering: What’s the difference?

What is the difference between RAG, prompt engineering, and fine-tuning? Let’s see how these three approaches differ in terms of their pros and cons, plus explore when and where to use each of them.

Based on the specifics of the techniques, each of them has advantages and drawbacks when it comes to achieving business purposes.

Implementation complexity

RAG systems are built by software engineers who understand LLM architecture and have expertise with vector databases and embeddings. The process requires considerable effort, even though RAG doesn’t change how the LLM itself works.

Fine-tuning involves dataset preparation, following a training pipeline, and model monitoring, which requires high technical expertise and knowledge of natural language processing.

Prompt engineering is quick to implement since it doesn't require any modification of the model or its infrastructure, but it does require prompting skills and domain-specific expertise.

Cost

The cost of a RAG implementation consists of setup expenses (document processing, embedding generation, and hosting) plus ongoing charges for LLM inputs and generated outputs. The final cost will depend on the number of external documents you use for RAG, data complexity, and the volume of LLM requests.

Fine-tuning cost depends on the model you use, storage price, training dataset size, and the number of requests per month. Computational and maintenance costs can be substantial and may require multiple GPUs, since model training takes a large number of iterations. However, once training is complete, running the model is less demanding.

Prompt engineering doesn’t require training, making it ideal for low-budget projects aiming to improve LLM accuracy. In this case, you pay only for inputs and outputs according to the pricing of the model you choose.

For any of these methods, you should also add the labor cost, whether it is a prompt engineer or a development team skilled in LLMs.
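
As a rough illustration of the usage-based part of the bill, the arithmetic can be sketched as follows. All numbers here are placeholder assumptions, not real rates: the per-1,000-token prices, token counts, and request volume are invented for the example, and the sketch assumes a provider that charges per input and output token. It also assumes that RAG adds retrieved context to every request, which increases the input size.

```python
def monthly_llm_cost(requests, input_tokens, output_tokens,
                     price_in_per_1k, price_out_per_1k):
    """Estimate monthly usage charges for an LLM API priced per token."""
    cost_per_request = ((input_tokens / 1000) * price_in_per_1k
                        + (output_tokens / 1000) * price_out_per_1k)
    return requests * cost_per_request

# Placeholder values (assumptions for illustration only, not real prices).
PRICE_IN = 0.01       # currency units per 1,000 input tokens
PRICE_OUT = 0.03      # currency units per 1,000 output tokens
REQUESTS = 10_000     # requests per month

# Prompt engineering only: a short prompt and a short answer.
prompt_only = monthly_llm_cost(REQUESTS, 200, 300, PRICE_IN, PRICE_OUT)

# RAG: the same prompt plus an assumed 1,500 tokens of retrieved context.
with_rag = monthly_llm_cost(REQUESTS, 200 + 1500, 300, PRICE_IN, PRICE_OUT)

print(f"Prompt engineering only: {prompt_only:.2f}")
print(f"RAG with extra context:  {with_rag:.2f}")
```

Setup, hosting, training compute, and labor are not included in this estimate and need to be added separately for each approach.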

Quality

RAG can provide up-to-date, domain-specific information since it relies on the current data you give it. However, the retrieval step adds latency to the LLM’s responses. Also, note that RAG doesn’t change the model itself; it only adds new information to what the model already knows. So, if you want to change the way the LLM responds, you should use fine-tuning rather than RAG.

Fine-tuning involves additional specialized training for a specific domain, so it not only adds new information but also adapts the style of LLM responses, provided you have high-quality training datasets. At the same time, a fine-tuned LLM delivers results faster than one with RAG since it doesn’t search external sources but relies on the knowledge encoded in its own weights. However, you should be aware that fine-tuning may cause catastrophic forgetting, where the model loses previously acquired capabilities after learning from new, focused training data.

Prompt engineering changes neither the model nor its data, yet it still allows you to significantly improve LLM responses if you use detailed prompts and advanced prompt engineering practices. The quality of responses depends entirely on your prompt engineer’s skills and domain-specific knowledge.

Data requirements

To get accurate responses using RAG, you need relevant and up-to-date data sources covering a diverse range of documents, websites, APIs, databases, etc. However, the more diverse your data is, the more effort it takes to process the different formats for efficient retrieval.

For efficient supervised fine-tuning, you need hundreds to thousands of labeled training examples tailored to your task. Some of the ready-made dataset collections you can use are HelpSteer, H2O LLM Studio, No_Robots, and Anthropic HH Golden.
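
To give a sense of what labeled training data looks like, the sketch below stores a couple of examples as JSON Lines (one JSON object per line), a layout commonly used for fine-tuning datasets. The `prompt` and `response` field names and the sample records are assumptions for illustration; the exact schema depends on the training tool you choose.

```python
import json

# Hypothetical labeled examples: each pairs an input with the desired output.
examples = [
    {"prompt": "Summarize: The patient reports mild chest pain after exercise.",
     "response": "Mild exertional chest pain."},
    {"prompt": "Summarize: The patient has had a dry cough for two weeks.",
     "response": "Two-week dry cough."},
]

def to_jsonl(records):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(record, ensure_ascii=False) for record in records)

def validate(records, required=("prompt", "response")):
    """Return the indexes of records with a missing or empty required field."""
    return [i for i, record in enumerate(records)
            if any(not str(record.get(key, "")).strip() for key in required)]

jsonl_text = to_jsonl(examples)
bad_rows = validate(examples)
print(jsonl_text)
print("records needing attention:", bad_rows)
```

A simple validation pass like this helps catch empty or incomplete records before they reach the training pipeline, where they are much harder to spot.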

Prompt engineering doesn’t require training data or any external data sources except for tools and prompt libraries that can improve your prompting, such as Priompt, Promptfoo, and PromptHub.

Update complexity

With RAG, as new data becomes available, you need to refresh the embeddings in your vector index, which in turn affects which content is retrieved. You can periodically re-embed the full corpus, new and existing data alike, or update the index in batches, which reduces computational costs.
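
One way to implement batch updates is to re-embed only the documents whose content has changed since the last run. The sketch below assumes an in-memory dictionary as the index and a placeholder `placeholder_embed` function in place of a real embedding model; both are hypothetical stand-ins for a vector database and an embedding API.

```python
import hashlib

def content_hash(text):
    """Fingerprint of a document's content, used to detect changes."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()

def placeholder_embed(text):
    """Stand-in for a real embedding model (assumption for illustration)."""
    return [float(len(text)), float(text.count(" ") + 1)]

def refresh_index(index, documents, embed=placeholder_embed):
    """Re-embed new or changed documents and drop deleted ones.

    index: dict mapping doc_id -> {"hash": str, "vector": list}
    documents: dict mapping doc_id -> current text
    Returns the list of doc ids that were (re)embedded.
    """
    changed = []
    for doc_id, text in documents.items():
        new_hash = content_hash(text)
        entry = index.get(doc_id)
        if entry is None or entry["hash"] != new_hash:
            index[doc_id] = {"hash": new_hash, "vector": embed(text)}
            changed.append(doc_id)
    # Remove entries for documents that no longer exist.
    for doc_id in list(index):
        if doc_id not in documents:
            del index[doc_id]
    return changed

index = {}
docs = {"faq": "Opening hours are 9 to 5.", "pricing": "The basic plan is free."}
first_run = refresh_index(index, docs)     # both documents are new
docs["faq"] = "Opening hours are 9 to 6."
second_run = refresh_index(index, docs)    # only the edited document changes
print(first_run, second_run)
```

Because unchanged documents are skipped, the embedding cost of each refresh scales with the amount of new or edited content rather than with the size of the whole corpus.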

To update your fine-tuned model, you would need to start a full retraining process using new data. The process is similar to what you did with initial fine-tuning.

Updating data in prompt engineering means adding new information directly to the prompt as context. For example, if you use in-context learning, update the examples provided; if it’s iterative refinement, provide feedback with the right answer for the initial outdated response.

| Characteristic | RAG | Fine-Tuning | Prompt Engineering |
|---|---|---|---|
| Implementation Complexity | Medium – requires setting up retrieval (vector DB, embedding, indexing) | High – requires dataset prep, training pipeline, compute | Low – quick to implement by crafting prompts |
| Costs | Medium to High – infrastructure, embeddings, storage, API usage | High – compute, training time, infrastructure | Low – mostly labor/time cost |
| Quality | Ensures up-to-date accurate responses with some latency | Improves or adapts model if done well | Depends entirely on base model + prompt effectiveness |
| Data Requirements | Medium – needs relevant documents in searchable format | High – needs large, clean labeled dataset | Low – doesn’t require training data |
| Update Complexity | Medium – requires updating embeddings | Slow – needs retraining for new data | Instant – change prompts as needed |

Use cases of RAG, fine-tuning, and prompt engineering

RAG is commonly used when you have an external source of data you want an LLM to work with. What are some areas where RAG is applied?

Knowledge search. You can use RAG for searching information across your enterprise database, internal wiki, and research papers.

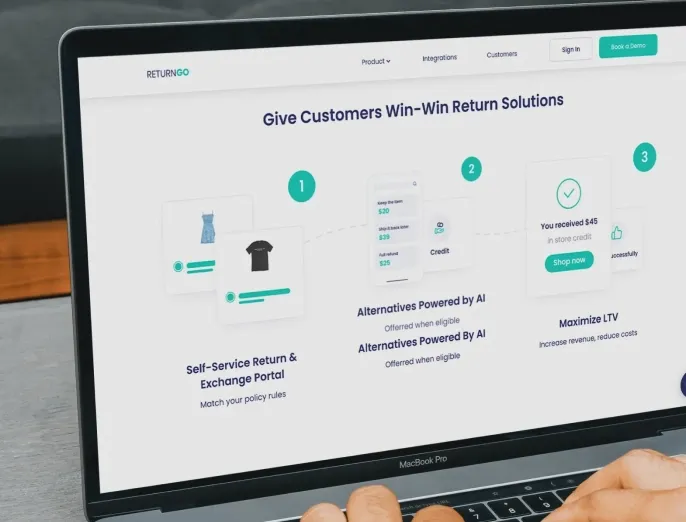

Customer support chatbots. RAG is great for creating AI chatbots with up-to-date information from your support database.

Educational tools. With RAG, you can ensure personalized learning, tailoring educational content to user needs.

Fine-tuning is used when RAG and prompt engineering are not enough to ensure brand consistency, in-depth domain expertise, and accuracy, or to reduce LLM hallucinations and biases.

Document processing. If you work in legal or another industry that requires high precision in document processing, a model fine-tuned on thousands of contracts or claims can help you optimize your workflows.

Complex calculations. Fine-tuning helps optimize workflows that involve complex calculations that can’t be handled through prompting or that cause errors when handled that way.

Domain-specific apps. You can use fine-tuning to create efficient, high-performing applications for any industry that requires specialized language and automation.

Prompt engineering, in some form, is adopted across multiple domains, from software development to content creation, to speed up processes and boost efficiency. What are some of the tasks for which prompt engineering is essential?

Data analysis. By using prompts, you can summarize research, generate reports, and find valuable insights that help develop your business.

Workflow automation. Prompt engineering allows you to automate tasks, such as weekly status reports, document creation, and more.

Software development. Advanced prompt engineering is indispensable for code creation, debugging, and overall acceleration of the development process.

RAG vs fine-tuning vs prompt engineering: which one to choose

Let’s sum up which approach to choose when deciding to adopt improved LLM capabilities in your business.

RAG is ideal for apps that need up-to-date information and control over the data without retraining the model.

Fine-tuning is best for repetitive tasks and deep personalization that require custom model behavior and more predictable outputs.

Prompt engineering is beneficial for experiments, MVPs, and simple automations when resources are limited.

If you’re looking for the right solution for your project, don’t hesitate to contact the DigitalSuits team. We have deep generative AI integration expertise and have succeeded in implementing LLM solutions for our clients.

Frequently asked questions

RAG vs fine-tuning: what is the difference and which is better?

Fine-tuning requires more time and resources than RAG. Plus, you need high-quality training datasets to get an effective solution. So, if your resources are limited but you want the LLM to work with specialized information, RAG is the preferred technique. On the other hand, if you’re planning to develop a tailored model that works in line with specific requirements, fine-tuning is the better choice.

Prompt engineering vs fine-tuning: what is the difference and which is better?

Prompt engineering is easier to adopt than fine-tuning, but the choice ultimately depends on your purposes, timelines, and budget. If you need assistance to make the right decision, we recommend opting for our generative AI consulting services.

RAG vs prompt engineering: what is the difference and which is better?

Prompt engineering is the most straightforward and cheapest way of improving LLM responses. However, if you need to apply specific domain knowledge, RAG is a better choice. Looking for a specialist to handle AI complexities? Contact us, and we’ll find the right one for you.
