Ever wondered how your favorite apps instantly suggest similar songs, recommend products you’ll love, or find the right images - even when you don’t know the exact words to search for? That is similarity search at work. Instead of relying on exact matches, it returns results that closely resemble what you're looking for, whether that is a design pattern, a document, or a recommendation.

This article covers the fundamentals of vector similarity search, its advantages over traditional techniques, real-world applications, benefits, and challenges. We’ll also provide actionable solutions and expert tips for successful implementation, helping you make informed decisions about using vector similarity search in your projects.

TL;DR: Learn about the benefits and use cases of vector similarity search so you can incorporate it effectively into your business.

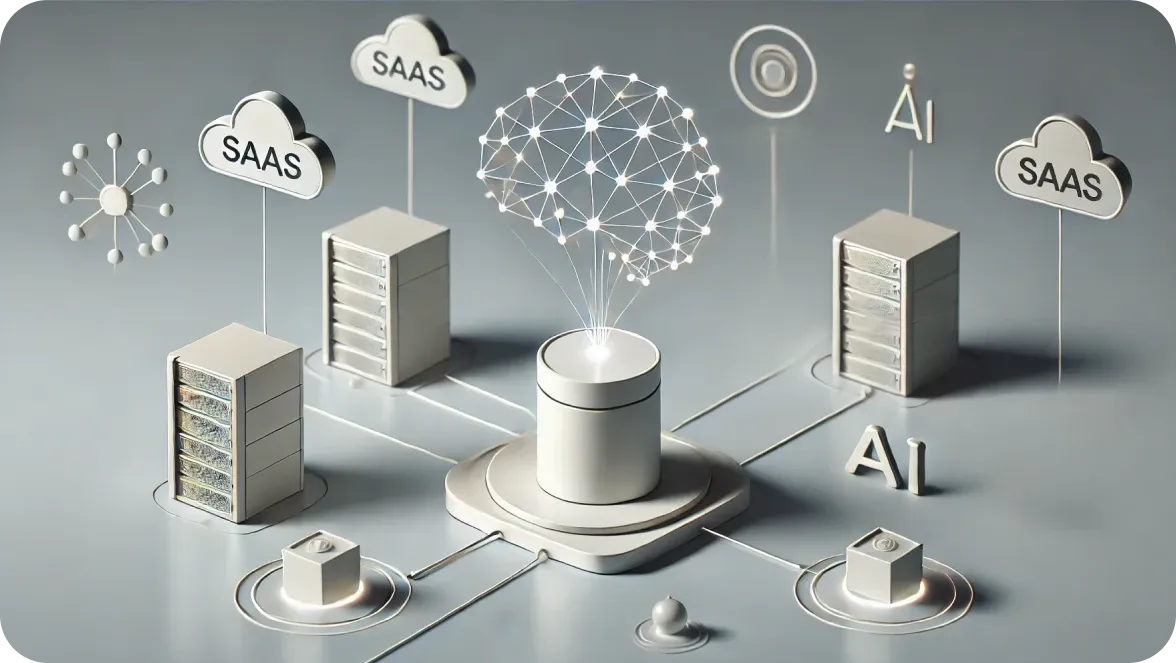

Vector similarity search is a method of finding items that are most similar to a query by comparing their vector representations, widely used in AI, machine learning, and recommendation systems.

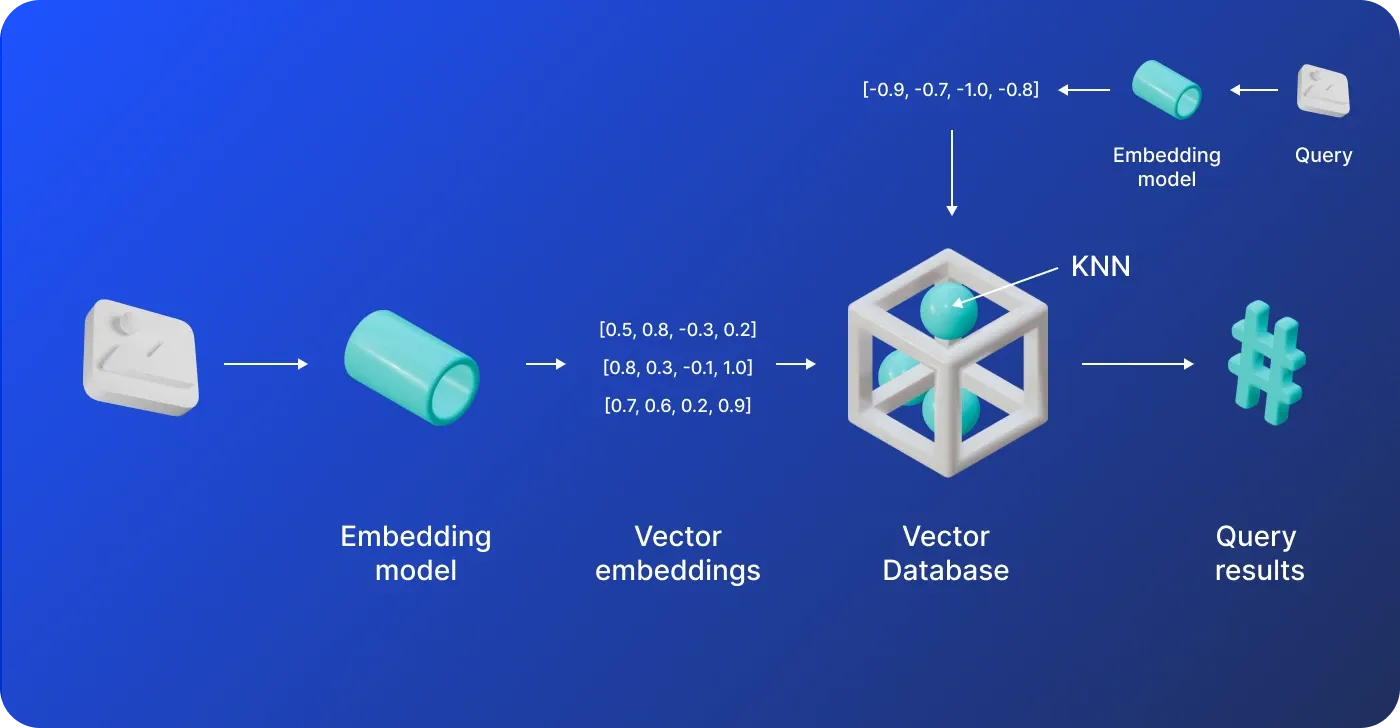

Key components of vector similarity search include vector embeddings, similarity scoring, and nearest neighbor algorithms, which work together to ensure accurate results. The process involves vector representation, indexing for efficiency, measuring similarity with distance metrics, querying and ranking, and post-processing for personalization.

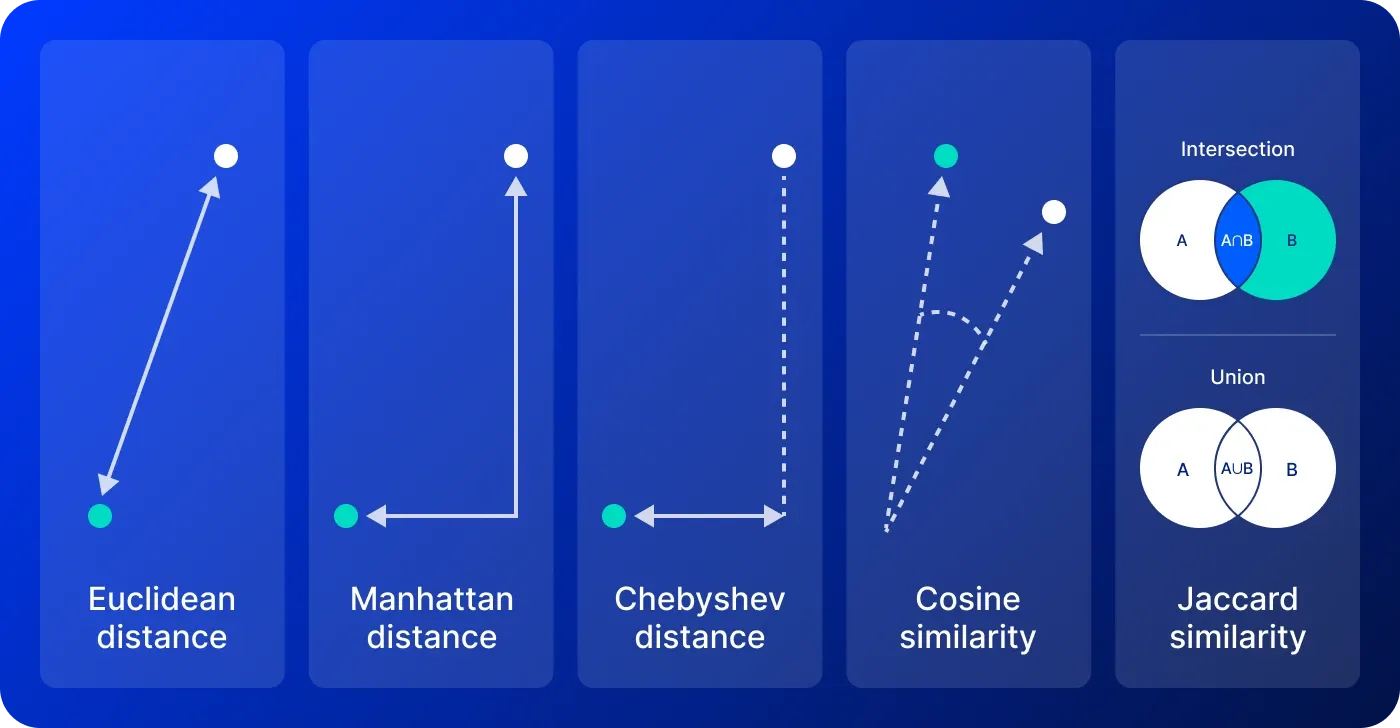

The main metrics used in vector similarity search are Euclidean, Manhattan, and Chebyshev distance, along with cosine and Jaccard similarity. Each suits specific applications, enabling efficient data comparison in high-dimensional spaces.

Definition of vector similarity search

Vector similarity search is a technique for finding the items most similar to a given query by comparing their vector representations - mathematical descriptions of objects based on key features. By identifying the closest neighbors to a query - for instance, related books based on their subjects or photographs based on their visual patterns - it drives search and recommendation algorithms. The technique is used mostly in fields like machine learning, information retrieval, recommendation systems, and AI-powered search engines.

The importance of vector similarity search

Vector similarity search improves how we process high-dimensional data. It makes working with large databases more efficient, speeds up retrieval, and makes it easier to uncover underlying insights for better decisions. Vector search marks a significant change in information extraction: it operates on vector embeddings, numerical representations of data that capture semantic meaning in a vector space.

Vector search can retrieve objects without matching their text. This matters when you need to find information in newer systems, especially AI applications such as image or audio retrieval, where traditional keyword search does not apply.

Similarity search vs. Traditional search

Similarity search retrieves results by comparing vectors, which suits images, free text, and other unstructured data. In contrast, traditional search relies on exact keywords and structured queries, which work best for structured, text-based retrieval.

How vector similarity search works

A vector search engine uses vector embeddings, similarity scoring, and nearest neighbor algorithms to efficiently find and retrieve vectors that closely match a given query.

Vector embeddings

These are numerical representations of data that capture essential features and patterns. For instance, in natural language processing, techniques like Word2Vec and GloVe transform words into dense, low-dimensional vectors that reflect their meanings. In computer vision, images are converted into vectors using deep learning models, enabling the capture of visual characteristics.

Examples:

Word2Vec: Turns words into vectors that reflect their meanings.

GloVe: Creates vectors from text by considering the global context of words.

Universal Sentence Encoder (USE): Generates embeddings for whole sentences, capturing their overall meaning.

CNNs (e.g., VGG): Produce embeddings for images, highlighting visual similarities.

Similarity scoring

Once the data points are represented as vectors, a similarity score is calculated to determine how closely related they are.

Common distance metrics used for similarity computation include:

Cosine similarity

Euclidean distance

Manhattan distance

Chebyshev distance

Jaccard similarity

Nearest neighbor algorithms

These algorithms are designed to efficiently search for the closest vectors to a query vector.

Popular methods include:

k-Nearest Neighbors (kNN): Exact, brute-force search compares the query with every stored vector, so each query costs O(n·d) for n vectors of dimension d. It is accurate but becomes slow on large datasets.

Space Partition Tree and Graph (SPTAG): Microsoft's open-source library that combines space-partitioning trees with a neighborhood graph to speed up nearest-neighbor searches.

Hierarchical Navigable Small World (HNSW): A graph-based algorithm that connects vectors in layered proximity graphs. A search starts in the sparse top layer and moves greedily toward the query through denser layers, enabling fast searches through randomization and local exploration.

Faiss (Facebook AI Similarity Search): An open-source library from Meta (formerly Facebook) for quick similarity search and clustering of dense vectors, offering various indexing structures to balance accuracy and speed.
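To make the baseline concrete, here is a minimal sketch of exact, brute-force nearest-neighbor search in plain Python. The function names and toy vectors are illustrative assumptions, not part of any library mentioned above; the indexing methods in this list exist precisely to avoid this full scan.

```python
import heapq
import math


def euclidean(a, b):
    """Straight-line (L2) distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_brute_force(query, vectors, k):
    """Return the k closest vectors as (distance, index) pairs.

    The query is compared with every stored vector exactly once, so one
    query costs O(n * d) distance work for n vectors of dimension d.
    """
    scored = ((euclidean(query, v), i) for i, v in enumerate(vectors))
    return heapq.nsmallest(k, scored)


# Toy data: five 2-dimensional vectors (illustrative values only).
vectors = [(0.0, 0.0), (1.0, 1.0), (5.0, 5.0), (0.5, 0.0), (9.0, 1.0)]
neighbors = knn_brute_force((0.0, 0.1), vectors, k=2)
nearest_ids = [i for _, i in neighbors]
print(nearest_ids)  # [0, 3]
```

The result is exact, which makes a scan like this a useful reference when checking how much accuracy an approximate index gives up.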

Key stages of the process:

- Vector representation

The process begins with transforming various data types - like text documents, images, or videos - into vector representations. For example, text documents may be encoded into vectors, where each dimension represents relevant words or concepts.

- Indexing for efficiency

After converting data into vectors, the next step is to organize these vectors in an index. This indexing helps speed up similarity searches and reduces the computational load, especially when working with large datasets. Data structures such as k-d trees, ball trees, or HNSW can be employed for effective indexing.

- Measuring similarity with distance metrics

The next crucial step involves selecting a distance metric to compute how similar two vectors are. The standard metrics include cosine similarity, Euclidean distance, Manhattan distance, Chebyshev distance, and Jaccard similarity. The choice of metric plays a significant role in the accuracy and effectiveness of the search.

- Querying and ranking

When a user submits a query vector, the algorithm compares it to the indexed vectors based on the chosen distance metric. The closest vectors are ranked and returned as search results, with the system prioritizing the most relevant matches, similar to how a search engine retrieves related documents.

- Post-processing for personalization

In many applications, particularly recommendation systems, a post-processing step refines the initial search results. Results may be filtered based on user preferences or past interactions, so the outcomes are tailored to individual needs and the user experience improves.
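The five stages can be sketched end to end in a few lines of Python. This is a toy illustration under loud assumptions: the corpus is made up, the "embedding" is a simple bag-of-words count vector standing in for a trained model, and the "index" is a plain dictionary scanned linearly rather than a structure like HNSW.

```python
import math
from collections import Counter

# Hypothetical toy corpus; a real system would use a trained embedding model.
DOCS = {
    "d1": "red running shoes for men",
    "d2": "blue running shoes for women",
    "d3": "stainless steel kitchen knife",
}
VOCAB = sorted({word for text in DOCS.values() for word in text.split()})


def embed(text):
    """Stage 1: bag-of-words count vector (stand-in for a learned embedding)."""
    counts = Counter(text.split())
    return [float(counts[word]) for word in VOCAB]


def cosine(a, b):
    """Stage 3: cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Stage 2: the "index" here is just a dict of precomputed vectors.
INDEX = {doc_id: embed(text) for doc_id, text in DOCS.items()}


def search(query, k=2, exclude=()):
    """Stages 4 and 5: score and rank, then drop items the user already saw."""
    q = embed(query)
    ranked = sorted(INDEX, key=lambda d: cosine(q, INDEX[d]), reverse=True)
    return [d for d in ranked if d not in exclude][:k]


results = search("red running shoes")
filtered = search("red running shoes", k=1, exclude={"d1"})
print(results, filtered)  # ['d1', 'd2'] ['d2']
```

The `exclude` argument is a deliberately simple form of post-processing; real systems often re-rank with user history or business rules instead.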

Vector similarity search distance metrics

Vector similarity search distance metrics are essential for comparing data in high-dimensional spaces, each tailored for specific types of analysis and applications. These metrics include:

Euclidean distance

This metric measures the straight-line distance between two points in high-dimensional space, similar to using a ruler.

Example: Assessing image similarity and measuring physical distances.

Use cases: Ideal for dense, continuous data where geometric distance matters.

Pros: Easy to calculate and interpret; performs well in low-dimensional spaces.

Cons: Can struggle with high-dimensional data due to the curse of dimensionality.

Manhattan distance

Commonly referred to as L1 distance, this metric measures the distance between vectors by summing the absolute differences of their corresponding dimensions.

Example: Used in pathfinding algorithms (such as A*) and urban planning.

Use cases: Best suited for grid-like data structures where movement is limited to specific directions.

Pros: Often more resilient to outliers compared to Euclidean distance.

Cons: Can be less intuitive for datasets that aren't based on grids and is sensitive to feature scaling.

Chebyshev distance

This metric measures the maximum coordinate difference between two vectors, which is helpful in scenarios like chess where diagonal movement is allowed.

Example: Chess algorithms, robotics navigation.

Use cases: Suitable for grid-based pathfinding with diagonal movement.

Pros: Simple to compute and effective in specific applications.

Cons: Less common in natural datasets; may not be intuitive for continuous data.

Cosine similarity

The metric focuses on the angle between two vectors, emphasizing direction over magnitude, making it ideal for textual data.

Example: Document similarity, recommendation systems for text.

Use cases: Great for high-dimensional sparse data where direction matters more than size.

Pros: Captures vector orientation well; not influenced by vector magnitude.

Cons: Discards magnitude, so it can mislead when vector length carries meaning (for example, how often an item was purchased).

Jaccard similarity

It measures the intersection over the union of two sets, which helps evaluate shared attributes in binary data.

Example: Document similarity.

Use cases: Evaluating text document similarity through shared terms, identifying similar customers in ecommerce by purchase history, and recommending movies based on rentals or ratings.

Pros: Simple and intuitive; effective for binary attributes.

Cons: Ignores term frequency and can be less effective for high-dimensional data.
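For readers who prefer code to prose, the five metrics can be written directly from their definitions. The sketch below uses only the Python standard library; the function names and sample vectors are illustrative choices.

```python
import math


def euclidean(a, b):
    """Square root of the summed squared coordinate differences (L2)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def manhattan(a, b):
    """Sum of absolute coordinate differences (L1)."""
    return sum(abs(x - y) for x, y in zip(a, b))


def chebyshev(a, b):
    """Largest absolute coordinate difference."""
    return max(abs(x - y) for x, y in zip(a, b))


def cosine_similarity(a, b):
    """Cosine of the angle between a and b; undefined for a zero vector."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def jaccard_similarity(s, t):
    """Size of the intersection divided by size of the union of two sets."""
    s, t = set(s), set(t)
    union = s | t
    return len(s & t) / len(union) if union else 1.0


a, b = (1.0, 2.0, 3.0), (4.0, 6.0, 3.0)
print(euclidean(a, b))   # 5.0
print(manhattan(a, b))   # 7.0
print(chebyshev(a, b))   # 4.0
print(jaccard_similarity({"a", "b", "c"}, {"b", "c", "d"}))  # 0.5
# Scaling a vector does not change its direction, so cosine stays near 1.
same_direction = cosine_similarity(a, (2.0, 4.0, 6.0))
```

Note how the first three functions return distances (smaller means more similar), while the last two return similarities (larger means more similar); mixing the two conventions is a common source of ranking bugs.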

Benefits of vector similarity search

Now, let’s explore the key benefits of vector similarity search.

Efficient searching

Vector similarity search facilitates the quick retrieval of similar items by utilizing advanced indexing structures, like HNSW graphs, along with optimized algorithms. This efficiency is essential for real-time applications such as recommendation systems, multimedia searches, and dynamic content delivery.

High accuracy

By focusing on the characteristics and connections between vectors, this approach uncovers patterns that keyword-based searches might overlook. This precision plays a vital role in personalized content because the satisfaction of the user depends on accurate suggestions.

Range query support

A range query returns every vector within a predefined distance of the query, rather than a fixed number of nearest neighbors. This is useful when an analysis, such as an economic data study, needs all the data points that fall inside set thresholds.
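As a rough illustration, a range (radius) query differs from a top-k query only in its stopping rule: it keeps everything inside a distance threshold. The sketch below is a linear scan with hypothetical names and toy points; it relies on `math.dist`, available in Python 3.8 and later.

```python
import math


def range_query(query, vectors, radius):
    """Return (index, distance) for every vector within `radius`, nearest first."""
    hits = []
    for i, v in enumerate(vectors):
        d = math.dist(query, v)  # Euclidean distance
        if d <= radius:
            hits.append((i, d))
    return sorted(hits, key=lambda hit: hit[1])


# Toy 2-dimensional points (illustrative values only).
points = [(1.0, 1.0), (2.0, 2.0), (8.0, 8.0), (1.5, 1.0)]
hits = range_query((1.0, 1.0), points, radius=1.0)
hit_ids = [i for i, _ in hits]
print(hit_ids)  # [0, 3]
```

Unlike a top-k search, the number of results is not known in advance: a tight radius may return nothing, and a loose one may return most of the dataset.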

Flexibility

Because vector similarity search is not domain-specific, it may be applied to any kind of data, including text, images, and numbers. Its ability to use many distance metrics offers the benefit of selecting the most appropriate approach for each unique situation.

Personalized results

Vector similarity search delivers highly personalized results by comparing content vectors to user-specific vectors. This enables systems to provide tailored content, recommendations, and ads that appeal to specific preferences.

Multilingual capabilities

In complex multilingual scenarios, vector search engines can accurately retrieve information by grasping linguistic nuances through the use of LLMs. Their ability to perform cross-lingual information retrieval makes them essential for seamless knowledge sharing and collaboration across different languages.

When would you use a vector search?

Vector similarity search is used across many industries to efficiently find and compare similar data points. Here are key use cases of the vector similarity search.

Recommendation systems

Vector similarity search algorithms play a key role in recommendation systems by identifying products with characteristics similar to those a user has already purchased or shown interest in. In online shopping, this similarity search improves user experience by suggesting products that align with customers' preferences, which increases engagement and sales.

Image and video retrieval

In the realm of multimedia, similarity search is essential for retrieving visually similar images and videos from extensive databases. Techniques like Content-Based Image Retrieval (CBIR) allow users to find images that match their queries based on visual attributes. Through effective content analysis and retrieval, this technology also powers video recommendation systems, which offer videos that are similar to ones a user has already seen, improving their video-watching experience.

NLP

In NLP, similarity search is a critical component in various text-based applications. Regardless of whether it’s grouping similar documents for topic modeling through document clustering or enhancing search engine results with semantic search, the ability to find semantically similar phrases elevates text comprehension. Furthermore, vector similarity search engines aid in plagiarism detection by identifying duplicate or highly similar texts, ensuring the integrity of written content.

Fraud detection

Similarity search is now widely used in fraud detection to spot patterns and suspicious behaviour. By comparing and matching similar transactions, the technology identifies likely cases of fraud. For instance, a payment can be flagged for investigation when it closely resembles past fraudulent payments, giving businesses an effective tool against money laundering.

Anomaly detection

Another key use of similarity search methods is finding anomalies in big data sets. By identifying data points that deviate from the norm, the program can flag outliers that need further investigation. This is especially important in manufacturing and information security, where catching unusual trends early can prevent more serious issues from arising.
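One simple way to turn nearest-neighbor search into an anomaly detector is to score each point by how far away its closest neighbors are. The sketch below is one possible approach, not the only one; the function name, the toy readings, and the choice of k are assumptions for illustration.

```python
import math


def knn_outlier_scores(points, k):
    """Score each point by its mean distance to its k nearest other points.

    Every pair of points is compared here, so this toy version costs
    O(n^2) distance computations; an index would avoid the full scan.
    """
    scores = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        scores.append(sum(dists[:k]) / k)
    return scores


# Four readings cluster together; the last one sits far away.
readings = [(1.0, 1.0), (1.1, 0.9), (0.9, 1.2), (1.0, 1.1), (6.0, 6.5)]
scores = knn_outlier_scores(readings, k=2)
most_anomalous = max(range(len(readings)), key=scores.__getitem__)
print(most_anomalous)  # 4
```

In practice the score would be compared against a threshold chosen from historical data rather than simply taking the maximum.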

Clustering

Clustering, an essential technique in data analysis, often leverages similarity search to group similar data points. By identifying the nearest neighbors in high-dimensional spaces, clustering algorithms efficiently partition data into meaningful segments. This approach is widely applied in customer segmentation, image segmentation, and various tasks that involve organizing similar instances to glean insights.

Healthcare and genomics

In healthcare and genomics, similarity search is invaluable for medical diagnosis and genetic research. By comparing patient data and medical imaging, healthcare professionals can identify similar cases that assist in diagnosis. In genomic research, finding similar genetic sequences enables scientists to study variations and their implications for human health, contributing to advancements in medicine.

Social media analysis

Social media platforms utilize vector similarity search to suggest new friends or connections based on shared interests and activities. By analyzing users' connections and preferences, these algorithms facilitate meaningful interactions, enhancing user engagement and helping to form robust online communities.

Content filtering and search

Similarity search is helpful in content filtering and search. It turns documents, articles, or internet pages into vectors, which makes it faster to pull up information that matches what users are looking for. So, if you need news that fits what you like or want to block some content, vector search makes sure you get the right info that feels just for you.

Biometric identification

Biometric systems rely on algorithms that match one sample against another. Whether they scan a fingerprint or analyze a face photo, they compare it against the records of individuals stored in a database. The same goes for voice recognition systems, which identify a person by the sound of their voice.

Document and text search

When it comes to document and text search, vector search algorithms facilitate the discovery of related content. By enabling users to find documents with similar themes or information, these algorithms are particularly beneficial for researchers and professionals searching for related articles or studies. This capability streamlines research processes and enhances knowledge acquisition.

Audio, video, and image search

Image and video algorithms identify similar-looking content based on what users are looking for. In audio applications, similarity search can detect songs similar to a particular track, making music discovery and exploration easy, or identify audio imperfections in big data sets, ensuring quality.

Vector similarity search challenges and ways to solve them

Here, we break down the key challenges of vector similarity search and practical ways to solve them.

Scalability

Managing massive datasets with billions of items requires advanced techniques and substantial computational resources. Traditional systems designed for exact matches often struggle to handle such scale efficiently.

Trade-off between accuracy and efficiency

Higher-dimensional vectors retain more information, improving search accuracy, but come at the cost of slower processing. Approximate nearest neighbor (ANN) techniques speed up searches but sacrifice some precision, requiring a balance between speed and accuracy.

High-dimensional data

The “curse of dimensionality” leads to sparse data in high-dimensional spaces, making similarity calculations less effective and computationally expensive as vector search algorithms struggle to find patterns in vast multi-dimensional spaces.

Choice of distance metric

Selecting the right distance metric is critical as different metrics yield varying results depending on the data type and application. A poor choice can lead to suboptimal search outcomes, reducing accuracy and effectiveness in similarity retrieval.

Results interpretability

Understanding why two vectors are deemed similar can be challenging, especially when similarity is based purely on mathematical measures. This limits insights from search results without additional analysis.

Handling vague and varied queries

User queries can range from generic to highly specific. Capturing the semantic nuances of these queries requires sophisticated algorithms that go beyond simple keyword matching.

Data distribution and skewness

Non-uniform or imbalanced data distributions can bias search results or degrade query performance, making it essential to design algorithms robust to such variations.

Indexing and storage requirements

Building efficient indexing structures for high-dimensional spaces incurs significant storage overhead and computational costs. Balancing indexing efficiency with storage constraints is a persistent challenge.

Privacy concerns

Vector search systems often involve sensitive data. Improper handling of this data can lead to privacy violations or data breaches, especially when APIs or external services are used.

Ways to solve challenges:

Dimensionality reduction

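Techniques like Principal Component Analysis (PCA) reduce dimensionality while preserving most of the variance in the data, mitigating the curse of dimensionality and improving efficiency. t-SNE is better suited to visualizing embeddings than to preparing them for search, because it preserves local neighborhoods rather than global distances.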
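To show what PCA does mechanically, here is a small standard-library sketch that finds the first principal component with power iteration and projects 2-dimensional points onto it. It is a teaching sketch under stated assumptions (tiny made-up data, a single component, non-degenerate covariance); production code would normally rely on a numerical library.

```python
import math


def mean_center(rows):
    """Subtract the per-column mean so the data is centered at the origin."""
    dim = len(rows[0])
    means = [sum(r[j] for r in rows) / len(rows) for j in range(dim)]
    return [[r[j] - means[j] for j in range(dim)] for r in rows]


def covariance(centered):
    """Sample covariance matrix of already-centered rows."""
    n, dim = len(centered), len(centered[0])
    return [
        [sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(dim)]
        for i in range(dim)
    ]


def top_component(cov, iterations=100):
    """Power iteration: repeatedly apply cov and renormalize the vector."""
    v = [1.0] * len(cov)
    for _ in range(iterations):
        w = [sum(row[j] * v[j] for j in range(len(v))) for row in cov]
        norm = math.sqrt(sum(x * x for x in w))  # assumes cov is not all zeros
        v = [x / norm for x in w]
    return v


def project(centered, component):
    """Reduce each row to one number: its coordinate along the component."""
    return [sum(x * c for x, c in zip(row, component)) for row in centered]


# Made-up points lying close to the line y = 2x.
data = [[1.0, 2.0], [2.0, 4.1], [3.0, 5.9], [4.0, 8.0]]
centered = mean_center(data)
component = top_component(covariance(centered))
reduced = project(centered, component)  # 2 dimensions reduced to 1
```

Because the points lie almost on a line, a single component keeps nearly all of the variance, and the ordering of the points along the line survives the reduction.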

Data preprocessing

Normalize or scale data to ensure consistent feature scaling during similarity computations. Proper preprocessing also handles outliers and missing values, enhancing overall accuracy.

Domain-specific distance metrics and adaptive distance metrics

Design metrics tailored to specific domains or use adaptive metrics that dynamically adjust based on data characteristics, ensuring better alignment with application needs.

Trade-off strategies and approximate indexing

Adopt ANN methods like HNSW or ScaNN that balance speed, accuracy, and storage requirements. These methods enable efficient searches in large datasets while maintaining acceptable precision levels.

Neural hashing

Use neural networks to generate compact binary codes from high-dimensional vectors. This reduces storage needs and accelerates similarity searches while preserving essential relationships between vectors.
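Training a neural hashing model is beyond a short snippet, so the sketch below swaps in a simpler, non-learned relative: random-hyperplane hashing, where each bit records which side of a random hyperplane a vector falls on. It only illustrates the idea of compact binary codes compared by Hamming distance; a neural approach would learn the projections from data instead of drawing them at random. All names and values are illustrative.

```python
import random


def make_hyperplanes(dim, num_bits, seed=0):
    """Draw `num_bits` random hyperplane normals (NOT learned, unlike neural hashing)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_bits)]


def binary_code(vector, hyperplanes):
    """One bit per hyperplane: 1 if the dot product is non-negative, else 0."""
    return [
        1 if sum(w * x for w, x in zip(plane, vector)) >= 0 else 0
        for plane in hyperplanes
    ]


def hamming(code_a, code_b):
    """Number of bit positions where the two codes differ."""
    return sum(bit_a != bit_b for bit_a, bit_b in zip(code_a, code_b))


planes = make_hyperplanes(dim=4, num_bits=16)
a = [1.0, 0.9, 0.0, 0.1]
b = [2 * x for x in a]   # same direction as a, twice the length
c = [-x for x in a]      # opposite direction

code_a = binary_code(a, planes)
code_b = binary_code(b, planes)
code_c = binary_code(c, planes)
print(hamming(code_a, code_b))  # 0: same direction, identical code
```

Each 16-bit code replaces four floating-point numbers, and comparing codes needs only bit operations, which is where the storage and speed savings come from.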

By addressing these challenges with tailored solutions, vector similarity search systems can achieve scalability, efficiency, and accuracy across diverse applications.

Tips for successful similarity search implementation

Here are actionable tips to ensure successful implementation of similarity search.

Data cleaning and normalization

Start your similarity search project by cleaning and normalizing your data. Cleaning involves going through your dataset to remove noise, mistakes, and any insignificant details. This makes sure you work with high-quality, meaningful data. Normalization puts your data on the same scale, which allows for fair comparisons without distorting the value ranges.

Using methods like z-score normalization or min-max scaling helps you get consistent results and reduces biases. Good data preprocessing is key, making your similarity searches more accurate and efficient.
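Both scaling methods are short enough to write by hand, which helps show what they actually do to a feature. The sketch below uses the standard library (`statistics.fmean` needs Python 3.8 or later); the function names and the sample prices are illustrative.

```python
import statistics


def min_max_scale(values):
    """Rescale values linearly so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:  # constant feature: nothing to scale
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def z_score(values):
    """Shift to zero mean and divide by the population standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:  # constant feature: every value equals the mean
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]


prices = [10.0, 20.0, 30.0, 40.0, 50.0]
scaled = min_max_scale(prices)
standardized = z_score(prices)
print(scaled)  # [0.0, 0.25, 0.5, 0.75, 1.0]
```

Whichever method you choose, compute the scaling parameters once on the indexed data and reuse them for incoming queries, so that queries and stored vectors live on the same scale.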

Algorithm configuration and optimization

Customizing algorithms like k-NN or HNSW requires careful tuning based on data type and size. Adjust settings to balance performance and precision, ensuring you get the best outcomes possible.

Data and query patterns change over time, so continual monitoring and testing are essential. Regularly retune and update your index and models to keep them optimized and responsive to changes in your data.

Data sharding and partitioning

To improve scalability and throughput, break big datasets into smaller, easier-to-handle chunks that can be processed in parallel, which results in quicker query responses. Sharding spreads data across several storage instances or servers, while good partitioning keeps those subsets evenly balanced.

This method doesn't just cut down on computational work per node; it also makes the whole system run better, which is crucial for big applications that deal with high-dimensional vectors. As your data gets bigger, these strategies will help you scale up.
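The scatter-gather pattern behind sharded search can be sketched in a few lines: each shard returns its own top-k, and a coordinator merges those partial lists into a global top-k. In this illustrative sketch the shards are just in-memory lists searched one after another; in a real deployment each would live on its own server and be queried in parallel. The round-robin assignment and all names are assumptions.

```python
import heapq
import math


def shard_round_robin(vectors, num_shards):
    """Assign (global_id, vector) pairs to shards in turn for balanced sizes."""
    shards = [[] for _ in range(num_shards)]
    for gid, v in enumerate(vectors):
        shards[gid % num_shards].append((gid, v))
    return shards


def search_shard(shard, query, k):
    """Local top-k within one shard, as (distance, global_id) pairs."""
    return heapq.nsmallest(k, ((math.dist(query, v), gid) for gid, v in shard))


def search_all(shards, query, k):
    """Gather each shard's local top-k and merge them into a global top-k."""
    partial = [hit for shard in shards for hit in search_shard(shard, query, k)]
    return heapq.nsmallest(k, partial)


# Ten toy vectors (0,0), (1,1), ..., (9,9) split across three shards.
vectors = [(float(i), float(i)) for i in range(10)]
shards = shard_round_robin(vectors, num_shards=3)
top = search_all(shards, (4.2, 4.2), k=3)
top_ids = [gid for _, gid in top]
print(top_ids)  # [4, 5, 3]
```

Asking every shard for a full top-k guarantees the merged result matches a single-machine exact search, at the cost of transferring k results per shard.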

Hardware acceleration

Using GPUs and TPUs can speed up processing, as these accelerators handle parallel computations much better than regular CPUs.

Optimize your software to tap into these hardware capabilities, which can improve throughput and cut down on lag time. This matters a lot for real-time apps and big data environments where performance makes all the difference.

High-dimensional data handling

Finally, managing high-dimensional data effectively is essential for maintaining efficiency and accuracy in your searches. Use dimensionality reduction techniques like PCA (principal component analysis) or, when you have labeled data, LDA (linear discriminant analysis) to simplify your data structure while preserving crucial information.

By combining these techniques with advanced algorithms, you can tackle complexity efficiently while ensuring optimal performance. Regularly evaluate your strategies to adapt to evolving data and query types, keeping your similarity search implementation robust and effective.

Wrapping up

Applying vector database similarity search enables your business to deliver smarter, more relevant insights that drive engagement and growth. As data evolves, adopting these techniques will be essential for remaining competitive and innovative.

Contact our team if you need more details or consultancy on implementing vector database similarity search into your business to improve search accuracy, upgrade recommendation systems, and unlock deeper insights from your data. The specialists at DigitalSuits excel in AI software development services - don’t hesitate to benefit from it now.

Frequently asked questions

What is the difference between Elasticsearch and vector search?

Elasticsearch is mainly built for full-text search and structured data retrieval using keyword techniques, while vector search specializes in finding similar items based on vector representations.

Can we use Elasticsearch as a vector database?

Yes, you can use Elasticsearch as a vector database for similarity search. Elasticsearch has introduced features that support vector embeddings, allowing users to perform similarity searches based on vector representations. However, it may not be as optimized for purely vector-based operations as specialized vector databases, which are designed specifically to handle large-scale vector searches more efficiently.

Does Google use vector search?

Yes, Google employs vector search techniques, particularly in its search algorithms and services like Google Search and Google Photos.

What is an example of a vector search?

An example of a vector search is a recommendation system that suggests similar products based on user preferences by comparing their vector representations.

Is vector search the same as semantic search?

No, vector search is not the same as semantic search, although the two are closely related. Semantic search is the goal of understanding the meaning and intent behind queries, and it can draw on knowledge graphs and NLP. Vector search is a technique that relies on mathematical representations and similarity calculations to find similar items in a high-dimensional space, and it is one common way to implement semantic search.
