Ever wondered how AI can produce accurate, relevant answers in seconds? What is RAG in AI, and how does it differ from the traditional model of Generative AI? More importantly, how can it advance your AI solutions to improve efficiency and customer satisfaction?

In this article, we’ll plunge into these questions:

The RAG AI definition

How retrieval augmented generation works

The architecture of RAG

Its key benefits and challenges

RAG practical use cases

You will clearly understand how RAG can be effectively implemented in your company to transform the use of Generative AI, driving business scaling, growth, and increased sales.

TL;DR: RAG GenAI enhances AI with real-time information

Retrieval-Augmented Generation (RAG) improves AI responses by retrieving relevant, up-to-date information from external sources.

Benefits of RAG GenAI include accurate responses, reduced hallucinations, domain-specific outputs, and cost-effective deployment.

Challenges and limitations involve integration complexity, data quality, and synchronization delays.

Use cases range from customer support, virtual assistants, and personalized recommendations to medical diagnosis, making RAG a versatile tool in various industries.

What does RAG stand for in AI?

Retrieval-Augmented Generation (RAG) is a method in Generative AI that enhances content generation by retrieving and integrating the most relevant, up-to-date information from external databases or knowledge sources. Unlike traditional models that rely solely on pre-trained knowledge, RAG dynamically pulls in external data to produce more accurate and contextually relevant outputs.

How does retrieval augmented generation work?

After understanding the RAG meaning in generative AI, the next logical question is, "How does RAG work?" Retrieval-Augmented Generation (RAG) systems operate by linking user prompts with relevant external data, pinpointing the pieces of information that are most semantically aligned with the query. This relevant data serves as context for the prompt, which is then fed into a large language model (LLM) to generate a precise, contextual response.

This retrieval augmented generation diagram shows how the system combines external information with user prompts to provide appropriate outputs:

In essence, retrieval augmented generation works by first gathering and processing relevant data from external sources, breaking it down into manageable chunks, embedding it into vector representations, processing the user query to identify pertinent information, and finally generating a detailed and accurate response using a language model. What sets RAG apart from traditional LLMs is its ability to utilize both the user’s query and the up-to-date data simultaneously, rather than relying solely on the user input and the pre-existing training data.

Step 1: Data gathering

The first step in implementing RAG GenAI is gathering all the necessary data for your application. For instance, if you’re developing a virtual health assistant for a healthcare provider, you would collect patient care guidelines, medical databases, and a compilation of common patient inquiries.

Step 2: Data breakdown

Next comes data chunking, where you divide your information into smaller, manageable segments. If you have a comprehensive 200-page medical guideline, you might break it down into sections that address different health conditions or treatment protocols. This method ensures that each data chunk is focused on a specific topic, making it more likely that the retrieved information will directly answer the user’s question. It also boosts efficiency, allowing the system to quickly access the most relevant pieces without wading through entire documents.
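As a rough illustration of this step, here is a minimal Python sketch that packs paragraphs into chunks up to a word limit. The function name `chunk_by_paragraph` and the 200-word default are assumptions made for this example, not part of any particular RAG library. Splitting on blank lines is only one simple strategy; splitting by section headings or by tokens is equally valid.

```python
def chunk_by_paragraph(text: str, max_words: int = 200) -> list[str]:
    """Group consecutive paragraphs into chunks of at most max_words words.

    A single paragraph longer than max_words is kept whole as its own chunk.
    """
    # Paragraphs are assumed to be separated by blank lines.
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks: list[str] = []
    current: list[str] = []
    count = 0
    for paragraph in paragraphs:
        n_words = len(paragraph.split())
        # Close the current chunk if adding this paragraph would exceed the limit.
        if current and count + n_words > max_words:
            chunks.append("\n\n".join(current))
            current, count = [], 0
        current.append(paragraph)
        count += n_words
    if current:
        chunks.append("\n\n".join(current))
    return chunks
```

Keeping paragraph boundaries intact means a chunk never cuts a sentence in half, which tends to make retrieved passages easier for the language model to use.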

Step 3: Document embeddings

Once your data is chunked, it’s time to convert it into vector representations, known as document embeddings. This involves transforming the text into numerical forms that capture its semantic meaning. The document embeddings help the system comprehend user queries and match them with relevant information based on meaning rather than a simple word-for-word comparison. This approach ensures that the responses provided are pertinent and aligned with what the user is asking.
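To make the idea of "text in, vector out" concrete, the sketch below uses a deliberately simple stand-in: it hashes each word into one of 64 buckets and normalizes the result. This is an assumption made so the example runs without any model download. It captures word overlap only, not semantic meaning, so in a real system you would replace `embed` with a call to a trained embedding model.

```python
import hashlib
import math
import re

DIM = 64  # hypothetical vector size; trained models typically use hundreds of dimensions


def embed(text: str, dim: int = DIM) -> list[float]:
    """Toy embedding: count words per hash bucket, then scale to unit length."""
    vec = [0.0] * dim
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        # md5 gives a stable bucket across runs (the built-in hash() is randomized).
        digest = hashlib.md5(token.encode("utf-8")).digest()
        vec[int.from_bytes(digest[:4], "big") % dim] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    # Text with no tokens stays an all-zero vector.
    return [v / norm for v in vec] if norm else vec
```

Whatever model you choose, the output has the same shape as here: a fixed-length list of numbers per chunk that can be stored and compared later.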

Step 4: Query processing

When a user submits a query, it also needs to be converted into an embedding. It’s crucial to apply the same model for both document and query embeddings to maintain consistency. Once the user query is embedded, the system compares it with the document embeddings to identify which chunks are most similar to the query embedding. Techniques like cosine similarity and Euclidean distance are used to find these matches, ensuring that the retrieved chunks are the most relevant to the user’s inquiry.
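The two similarity measures mentioned above can be sketched in a few lines of plain Python. The helper `top_k` and its default of three results are assumptions for illustration; production systems usually delegate this search to a vector database rather than scanning every vector.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def euclidean_distance(a: list[float], b: list[float]) -> float:
    """Straight-line distance between two vectors; 0.0 means identical."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def top_k(query_vec: list[float], doc_vecs: list[list[float]], k: int = 3) -> list[int]:
    """Return the indices of the k document vectors most similar to the query."""
    scored = [(cosine_similarity(query_vec, vec), i) for i, vec in enumerate(doc_vecs)]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [i for _, i in scored[:k]]
```

Note the opposite directions: a higher cosine similarity means a better match, while a lower Euclidean distance means a better match.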

Step 5: Output formulation with an LLM

Finally, the selected text chunks, along with the original user query, are processed by a language model. The model combines this information to generate a coherent response tailored to the user’s needs.
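One common way to combine the retrieved chunks with the query is to place them in a single prompt. The sketch below assumes a hypothetical `llm` callable that takes a prompt string and returns text; it stands in for whichever model client you use. The prompt wording is also only an example.

```python
from typing import Callable


def build_prompt(query: str, chunks: list[str]) -> str:
    """Assemble a prompt that puts numbered context chunks before the question."""
    # Numbering the chunks lets the model refer back to them as sources.
    context = "\n\n".join(f"[{i}] {chunk}" for i, chunk in enumerate(chunks, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


def answer(query: str, chunks: list[str], llm: Callable[[str], str]) -> str:
    """Send the assembled prompt to the language model and return its reply."""
    return llm(build_prompt(query, chunks))
```

Passing the model in as a plain function keeps the prompt logic easy to test and makes it simple to swap one model provider for another.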

To streamline the process of generating responses with large language models, you can use a data framework like LlamaIndex. This solution helps you efficiently manage the flow of information from external data sources to language models like GPT-3, making it easier to develop your own LLM applications.

Retrieval augmented generation architecture

The RAG architecture consists of two key components: a retriever and a generator. The two-part approach boosts text generation by integrating retrieval capabilities right into the model. This method allows for responses that are both well-informed and contextually relevant, thanks to verified data.

The retriever and generator components work together to produce high-quality, accurate replies. This makes the RAG architecture a valuable tool for LLM applications like AI chatbots and question-answering systems, which require advanced language understanding and effective communication.

Let’s dive deeper into how each component plays a crucial role in the RAG system’s functionality and explore the RAG model architecture diagram, showing how the retriever gathers data for the generator to produce accurate responses.

The retriever component

Function: The retriever is responsible for locating relevant documents or pieces of information to help address a query. It takes the input query and searches a specified database to find information that can assist in forming a response.

Types of retrievers:

Dense retrievers: These types utilize neural network techniques to generate dense vector embeddings of the text. They are particularly effective when the focus is on understanding the underlying meaning of the text rather than matching the exact words, as these embeddings capture semantic similarities.

Sparse retrievers: These rely on term-matching strategies, such as TF-IDF or BM25. They are particularly adept at locating documents that contain exact keyword matches, making them valuable for queries with unique or rare terms.
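To show what term matching looks like in practice, here is a compact sketch of BM25 scoring. It assumes whitespace tokenization, the commonly used defaults `k1=1.5` and `b=0.75`, and the IDF variant with `+ 1` inside the logarithm (which keeps scores non-negative). Established search libraries differ in these details, so treat the numbers as illustrative.

```python
import math
from collections import Counter


def bm25_scores(query: str, docs: list[str], k1: float = 1.5, b: float = 0.75) -> list[float]:
    """Score every document against the query with a basic BM25 formula."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    if n_docs == 0:
        return []
    avg_len = sum(len(tokens) for tokens in tokenized) / n_docs
    # Number of documents that contain each term at least once.
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    scores = []
    for tokens in tokenized:
        tf = Counter(tokens)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue
            # Rare terms get a larger weight than common ones.
            idf = math.log((n_docs - doc_freq[term] + 0.5) / (doc_freq[term] + 0.5) + 1.0)
            # Term frequency is dampened and adjusted for document length.
            denom = tf[term] + k1 * (1 - b + b * len(tokens) / avg_len)
            score += idf * tf[term] * (k1 + 1) / denom
        scores.append(score)
    return scores
```

A document that lacks every query term scores exactly zero, which is why sparse retrieval excels at rare keywords but misses paraphrases that a dense retriever could catch.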

The generator component

Function: The generator is a language model that creates the final text output. It incorporates both the input query and the context retrieved by the retriever to produce a coherent and relevant response.

Interaction with the retriever: The generator collaborates with the retriever; it depends on the context provided by the retriever to shape its responses. This connection ensures that the output, besides being plausible, is also very detailed and accurate, adding quality to the response.

The retrieval augmented generation (RAG) architecture integrates the generation and retrieval together seamlessly, resulting in improved answers through direct access to information and advanced language modeling.

Key benefits of RAG AI

Answering "What is the main advantage of retrieval-augmented generation (RAG)?" is challenging, as RAG offers numerous benefits for improving LLM capabilities. Let’s dive into how retrieval augmented generation (RAG) can refine your model’s outputs and learn its primary benefits.

#1 Up-to-date information

Unlike traditional models that rely on static training data, which can become outdated, retrieval augmented generation uses current sources to deliver accurate and timely responses.

#2 Minimized inaccuracies

By prioritizing relevant external knowledge, RAG reduces the chances of the model providing incorrect information (i.e., hallucination). Plus, it can even include citations from original sources, making it easier for users to verify the content.

#3 Industry-relevant responses

RAG enables responses that are customized to the unique context or proprietary data of the organization, ensuring answers are more pertinent and aligned with specific needs.

#4 Resource-saving deployment

Implementing RAG AI offers a simpler and more cost-effective way to use large language models with domain-specific data, as it avoids the need for extensive customization. This is especially helpful when new data needs to be integrated frequently.

#5 Increased data confidentiality

With RAG, organizations maintain control over their data, enhancing privacy. It pulls in real-time information from carefully selected sources rather than depending on centralized databases, helping to keep sensitive data safe. This approach helps manage privacy risks and can support compliance with regulations like GDPR or HIPAA, making it a more privacy-focused way to generate AI-driven responses.

#6 Expanded LLM memory capabilities

RAG overcomes the information capacity limitations of traditional LLMs. While traditional models have a limited memory (known as “Parametric memory”), RAG introduces “Non-Parametric memory” by tapping into external knowledge sources. This greatly expands the knowledge base, allowing for more comprehensive and accurate responses.

#7 Verified and transparent sourcing

Models integrated with retrieval augmented generation can provide sources for their answers, boosting transparency and credibility. Users can easily access the information behind the LLM’s responses, fostering trust in AI-generated content.

#8 Decreased bias and error

By relying on verified and accurate external data sources, LLMs enhanced with RAG retrieval mechanisms greatly reduce the likelihood of users receiving incorrect responses to their queries. This helps ensure the generated content aligns more closely with factual information, making the AI system more reliable.

Challenges and limitations of RAG AI

Integrating information retrieval with natural language processing through RAG applications can be incredibly beneficial, but it’s not without its challenges. In this section, we’ll dive into some of the hurdles you might face when implementing these applications and explore practical ways to tackle them.

Integration complexity

One of the main challenges with the RAG model is its integration complexity, especially when dealing with different types of data sources. To achieve consistency, it’s crucial to preprocess the data for uniformity and standardize embeddings, ideally by using separate modules for each data type.
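The idea of one module per data type can be sketched as a small set of loader functions that all return the same record shape. Everything named here is hypothetical: the `Record` layout, the `"body"` field in JSON, and the `question` and `answer` CSV columns are assumptions chosen only to make the example runnable.

```python
import csv
import io
import json
from typing import Callable

Record = dict[str, str]  # uniform shape: {"source": ..., "text": ...}


def from_plain_text(raw: str) -> list[Record]:
    return [{"source": "text", "text": raw.strip()}] if raw.strip() else []


def from_json(raw: str) -> list[Record]:
    # Assumes a JSON array of objects with a "body" field (hypothetical schema).
    return [{"source": "json", "text": str(item["body"]).strip()} for item in json.loads(raw)]


def from_csv(raw: str) -> list[Record]:
    # Assumes a header row with "question" and "answer" columns (hypothetical schema).
    rows = csv.DictReader(io.StringIO(raw))
    return [{"source": "csv", "text": f"{row['question']} {row['answer']}".strip()} for row in rows]


# One loader per data type; adding a new type means adding one entry here.
LOADERS: dict[str, Callable[[str], list[Record]]] = {
    "txt": from_plain_text,
    "json": from_json,
    "csv": from_csv,
}


def normalize(kind: str, raw: str) -> list[Record]:
    """Convert raw content of a known type into uniform records."""
    if kind not in LOADERS:
        raise ValueError(f"unsupported data type: {kind}")
    return LOADERS[kind](raw)
```

Because every loader produces the same record shape, the chunking and embedding steps downstream do not need to know where the text came from.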

Growth in data volume

As your data volume grows, you might notice that the efficiency of your RAG AI system starts to drop, especially during tasks like generating embeddings and retrieving information. To keep everything running smoothly, consider distributing the computational load across multiple servers, investing in strong hardware, caching commonly queried data, and implementing vector databases for faster retrieval.
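Caching commonly queried data can be as simple as memoizing the query embedding step. The sketch below uses Python’s standard `functools.lru_cache`; `expensive_embed` is a placeholder for a real embedding call, and the call counter exists only to show that repeated queries skip the expensive work.

```python
from functools import lru_cache

calls = {"embed": 0}  # counts how often the expensive step actually runs


def expensive_embed(text: str) -> tuple[float, ...]:
    """Placeholder for a slow embedding call (for example, a network request)."""
    calls["embed"] += 1
    return tuple(float(ord(ch)) for ch in text[:8])  # dummy vector, not a real embedding


@lru_cache(maxsize=1024)
def cached_embed(text: str) -> tuple[float, ...]:
    # lru_cache needs hashable arguments and results, hence str in and tuple out.
    return expensive_embed(text)


def embed_query(query: str) -> tuple[float, ...]:
    """Normalize case and whitespace so near-identical queries share a cache entry."""
    return cached_embed(" ".join(query.lower().split()))
```

An in-process cache like this helps a single server; when the load is spread across multiple servers, a shared cache is the more typical choice.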

Data precision

The success of the RAG system depends on the quality of the data it uses. Relying on poor data sources can lead to inaccurate responses. Therefore, it’s essential to focus on thorough content curation and involve subject matter experts to refine the data before bringing it into the system.

Syncing difficulties

Maintaining an up-to-date retrieval database requires a robust synchronization system that can handle frequent updates without compromising performance.
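One lightweight way to handle frequent updates is to store a content fingerprint with each indexed document and re-embed only what has changed. This is a sketch under assumptions: `plan_sync` and its input shapes are invented for illustration, and a real pipeline would also need to apply the plan to its vector store.

```python
import hashlib


def fingerprint(text: str) -> str:
    """Stable hash of a document's content."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def plan_sync(source_docs: dict[str, str], indexed: dict[str, str]) -> dict[str, list[str]]:
    """Compare current documents with the index and list the work to do.

    source_docs maps document id to current text.
    indexed maps document id to the fingerprint stored at the last indexing run.
    """
    # New or changed documents need to be embedded again.
    upsert = sorted(
        doc_id for doc_id, text in source_docs.items()
        if indexed.get(doc_id) != fingerprint(text)
    )
    # Documents that disappeared from the source should leave the index too.
    delete = sorted(doc_id for doc_id in indexed if doc_id not in source_docs)
    return {"upsert": upsert, "delete": delete}
```

Unchanged documents are skipped entirely, so the cost of each sync run scales with the number of edits rather than the size of the whole knowledge base.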

Data retrieval faults

Retrieval errors can lead to hallucinations in the RAG model, often stemming from mismatches between queries and relevant documents, resulting in irrelevant or low-quality results.
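One possible mitigation, offered here as a suggestion rather than a fix described in this article, is to drop weakly matching chunks before they reach the language model. The sketch assumes each hit is a `(text, score)` pair where a higher score means a closer match; the `0.3` threshold is arbitrary and would need tuning on your own data.

```python
def filter_hits(hits: list[tuple[str, float]], min_score: float = 0.3, k: int = 3) -> list[str]:
    """Keep at most k chunks whose similarity score reaches min_score, best first."""
    kept = sorted(
        (hit for hit in hits if hit[1] >= min_score),
        key=lambda hit: hit[1],
        reverse=True,
    )
    return [text for text, _ in kept[:k]]
```

If the result is empty, the application can tell the user it found nothing relevant instead of asking the model to answer from unrelated context.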

Response delay

Retrieval processes can sometimes cause delays, which can slow down the system’s response time. This is particularly important for applications that rely on real-time interactions, like chatbots.

How to use retrieval augmented generation across various industries

Here are primary use cases showing how RAG AI enhances efficiency and user experience across different sectors:

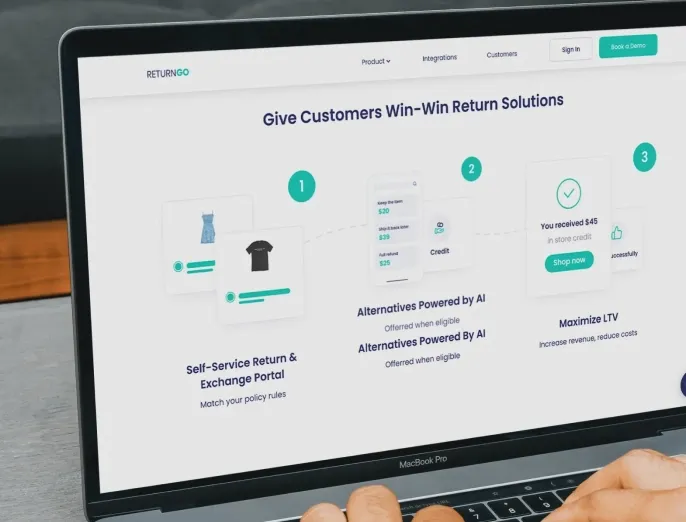

Support solutions

RAG-enabled chatbots provide accurate responses by retrieving product details and support documents.

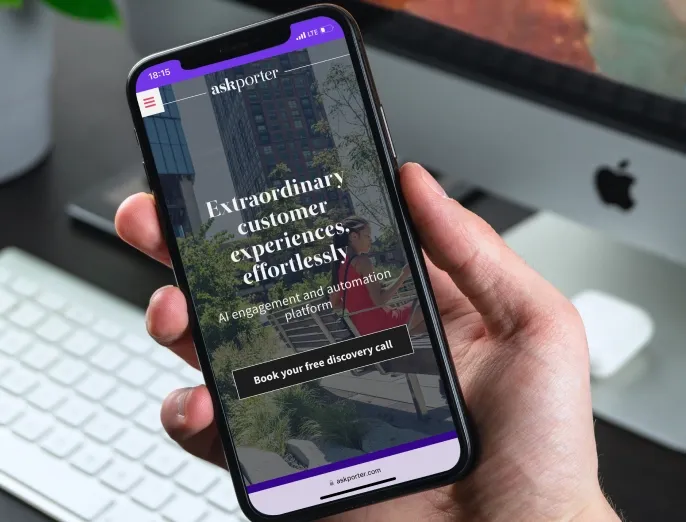

Personal assistants

Virtual assistants use RAG to fetch real-time data, making conversations more relevant with updates on weather and news.

Content creation

Journalistic AI tools utilize RAG to collect current facts, streamlining content generation and reducing editing needs.

Education

Educators and edtech platforms use RAG to create personalized lessons and provide detailed explanations, offering students diverse perspectives and context for complex topics.

Analytical research

AI supports researchers by summarizing academic papers and updating information from various sources.

Healthcare

RAG systems assist medical professionals by accessing the latest research and patient records for informed diagnoses and treatments.

Translation services

RAG enhances translations by considering context and cultural nuances, resulting in more accurate outputs.

Data interpretation

Integrating LLMs with specific data improves response reliability and accuracy, minimizing generic content.

Summary generation

RAG efficiently summarizes extensive reports, helping executives access critical findings quickly.

Custom recommendations

RAG provides tailored product recommendations based on customer data analysis, improving user experience and increasing revenue.

Business intelligence

RAG simplifies trend analysis across business documents, helping organizations gain insights and conduct better market research.

Semantic matching

Similarity search retrieves relevant information by comparing the semantic meaning of a user’s query with stored data, enhancing the response quality.

Conclusions

Understanding RAG in GenAI is vital for business leaders looking to integrate AI into their operations, as it improves accuracy and efficiency through up-to-date data. By grasping the RAG AI meaning, its benefits, and use cases, businesses can open up new avenues for growth and innovation. This can completely change how they utilize data to make decisions and improve customer experiences. If you need help or recommendations for implementing RAG AI in your company, feel free to contact our team - we’d love to discuss the details with you.

Frequently asked questions

Which industries would take advantage of RAG most?

Industries like customer service, healthcare, education, and ecommerce can take advantage of RAG AI. Any industry that relies on real-time information can benefit from RAG’s capabilities.

What are the initial steps to implement RAG AI in my business?

Start by looking through your existing systems and data sources. Learn which RAG frameworks and tools are available, and feel free to contact us if you need help. Our professionals will assist you with establishing a straightforward implementation process.

Can RAG be used in combination with other AI technologies?

Yes, certainly! RAG can be combined with other AI solutions like chatbots or predictive analytics tools to enhance their functionality and deliver more holistic customer service.
