If you use audio recordings in any area of your business, you might have been looking for a tool that automatically generates transcripts. Such a tool can be applied in many ways, from creating subtitles for a vlog to transcribing interviews. However, a standalone solution may be insufficient for a business’s daily routine. That’s where OpenAI Whisper may come in handy.

You can incorporate this pre-trained model into your business infrastructure to automate speech-to-text tasks and eliminate tons of manual work. This article guides you through Whisper's capabilities and benefits for business. We also explain how the model works and how to use Whisper AI in your projects for the best outcome.

TL;DR: OpenAI Whisper speech-to-text model for transcription and translation

Whisper is an ASR model trained on diverse audio datasets to recognize and transcribe human speech.

OpenAI offers substantial customization opportunities since Whisper is primarily intended for further development of domain-specific applications.

Whisper's most prominent features are its multilingual support, high accuracy, ability to work with noisy environments, and adaptability to various dialects, accents, and speaking styles.

Whisper is applicable for multiple use cases, from video captioning to voice verification and linguistic research.

The model has its limitations, such as lower accuracy for less common languages and errors when working with very specialized vocabularies.

The best practices for overcoming Whisper's limitations are transcript post-processing, quality audio input, fine-tuning, combining Whisper with other AI models, adjusting to user feedback, and setting up confidence thresholds.

What is OpenAI Whisper

Whisper is an advanced automatic speech recognition (ASR) model from OpenAI, trained on 680,000 hours of supervised audio data collected from the web. English audio with matching English transcripts makes up 65% of this data, non-English audio with English transcripts 18%, and non-English audio with non-English transcripts 17%. The non-English data represents 98 different languages.

The model is designed mainly to recognize human speech in a recording and convert it into written text. It can also translate speech from many languages into English.

OpenAI released the Whisper model in September 2022 as free, open-source software under the MIT license. The model is primarily intended for AI researchers and developers who want to tailor Whisper’s speech-to-text capabilities to specific use cases, so you won’t find an in-browser application like OpenAI’s ChatGPT. Instead, you integrate it into your project through OpenAI’s speech-to-text API or the open-source code available on GitHub.

Is OpenAI Whisper a model or a system?

OpenAI describes Whisper as a neural net, as well as an ASR system and a set of AI models. What is the difference between these terms, and how do they apply to Whisper?

Whisper is primarily an AI/ML model for speech-to-text recognition: it learned to map speech to text from a large collection of audio recordings paired with transcripts.

At the same time, Whisper is a neural network, a type of AI model loosely inspired by how the brain learns. Its interconnected artificial neurons pass information to one another and transform it through non-linear functions. Such networks are trained through empirical risk minimization: the network’s parameters are adjusted to minimize the average difference (the loss) between its predicted outputs and the actual target values in the training dataset.
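Written out, empirical risk minimization chooses the parameters θ that minimize the average loss over N training pairs of audio x_i and transcript y_i. The second formula assumes the usual token-level cross-entropy loss for sequence-to-sequence models; that is a standard formulation, not a detail stated in this article:

```latex
\hat{\theta} = \arg\min_{\theta} \frac{1}{N} \sum_{i=1}^{N} \mathcal{L}_i(\theta),
\qquad
\mathcal{L}_i(\theta) = -\sum_{t=1}^{T_i} \log p_{\theta}\left(y_{i,t} \mid y_{i,<t},\, x_i\right)
```

Here T_i is the number of tokens in the i-th transcript. Each term rewards the model for assigning high probability to the correct next token, given the audio and the tokens that precede it.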

You can also view Whisper as an ASR system, since the speech-to-text model comes with surrounding infrastructure, such as audio preprocessing, decoding logic, and a command-line tool, that turns it into a working transcription pipeline.

Finally, Whisper includes six models of different sizes, speed, and accuracy: tiny, base, small, medium, large, and turbo. You can choose one based on the computational resources and the trade-off between speed and accuracy. The tiny model is the fastest but least accurate, while the large version is highly accurate but needs more computational resources.
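As a rough illustration of that trade-off, the Python sketch below picks the most accurate model that fits a given amount of GPU memory. The VRAM figures are the approximate values listed in the openai/whisper README. The accuracy ranking, in particular placing turbo between medium and large, is an assumption of this sketch, and `pick_model` is a hypothetical helper, not part of the library.

```python
# Approximate VRAM needs (GB) of the multilingual checkpoints, as listed in the
# openai/whisper README. Treat them as rough guides, not guarantees.
MODEL_VRAM_GB = {
    "tiny": 1,
    "base": 1,
    "small": 2,
    "medium": 5,
    "turbo": 6,
    "large": 10,
}

# Assumed ranking from least to most accurate (an assumption of this sketch).
ACCURACY_ORDER = ["tiny", "base", "small", "medium", "turbo", "large"]


def pick_model(available_vram_gb: float) -> str:
    """Return the most accurate model whose approximate VRAM need fits."""
    fitting = [
        name for name in ACCURACY_ORDER
        if MODEL_VRAM_GB[name] <= available_vram_gb
    ]
    if not fitting:
        raise ValueError(f"No Whisper model fits in {available_vram_gb} GB of VRAM")
    return fitting[-1]  # last fitting entry = most accurate under the assumed order


if __name__ == "__main__":
    for vram in (1, 4, 8, 16):
        print(f"{vram} GB -> {pick_model(vram)}")
```

You could then pass the result to `whisper.load_model(...)` from the openai-whisper package. English-only variants such as `base.en` also exist for the smaller sizes and are not covered here.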

How does OpenAI Whisper work

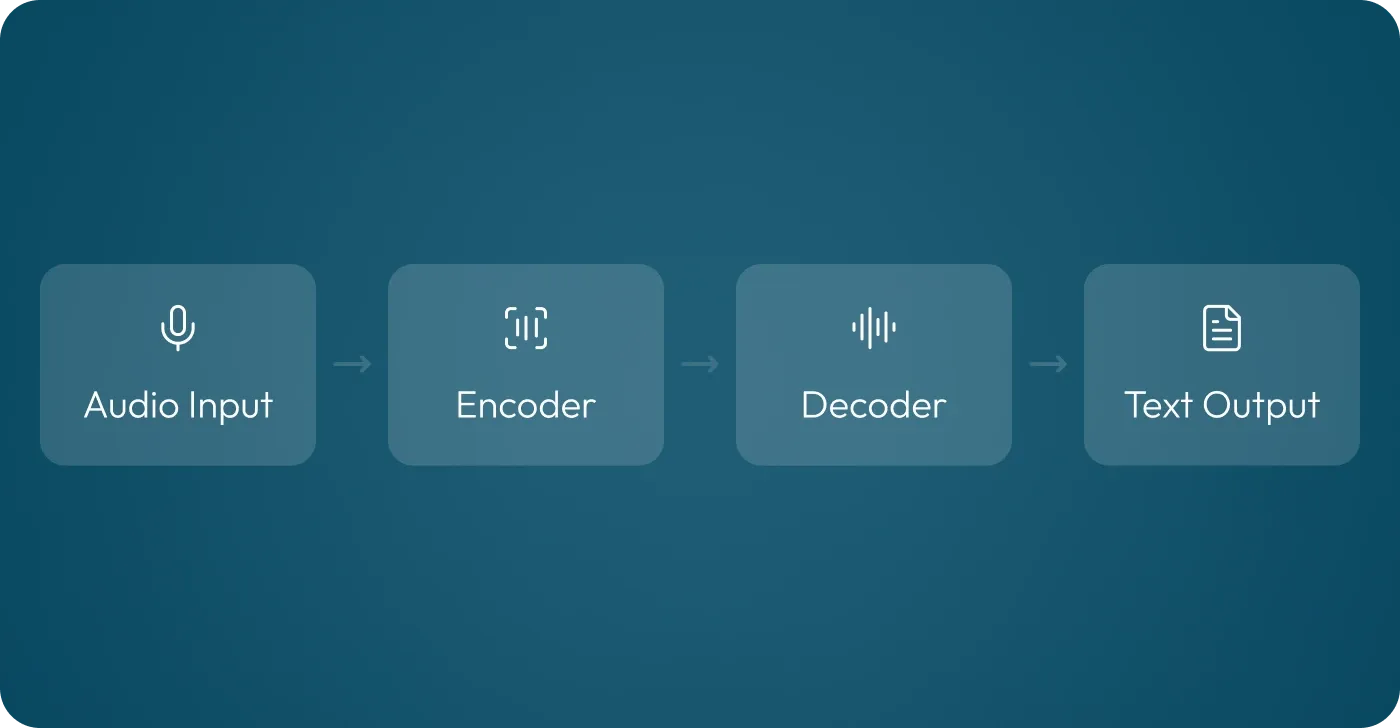

Traditional speech recognition pipelines combine several separately trained components to analyze and decode audio. Whisper, by contrast, is a single end-to-end neural network, which reduces development time and costs. It uses an encoder-decoder transformer architecture: the encoder processes the audio input, and the decoder generates the text output.

How does Whisper AI work in detail? Once the system receives audio input, it splits the recording into 30-second chunks and converts each chunk into a log-Mel spectrogram, a representation of how the energy in each frequency band changes over time. The encoder receives the spectrogram and transforms it into vector representations called embeddings. Its stack of transformer layers captures the structure of the speech and its context.
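The chunking step can be sketched in plain Python. The constants (16 kHz audio, 30-second windows, a 160-sample hop between spectrogram frames) match the openai-whisper implementation. The helpers themselves are simplified, list-based stand-ins: `pad_or_trim` borrows the name of a real openai-whisper function, and the library's `transcribe()` actually advances its window based on predicted timestamps rather than cutting fixed, back-to-back chunks.

```python
SAMPLE_RATE = 16_000                     # Whisper works on 16 kHz mono audio
CHUNK_SECONDS = 30                       # each encoder window covers 30 seconds
N_SAMPLES = SAMPLE_RATE * CHUNK_SECONDS  # 480,000 samples per window
HOP_LENGTH = 160                         # 10 ms between spectrogram frames


def pad_or_trim(samples, length=N_SAMPLES):
    """Zero-pad or cut a list of samples to exactly `length` samples."""
    if len(samples) >= length:
        return samples[:length]
    return samples + [0.0] * (length - len(samples))


def split_into_windows(samples):
    """Cut audio into back-to-back 30-second windows, padding the last one."""
    return [
        pad_or_trim(samples[start:start + N_SAMPLES])
        for start in range(0, len(samples), N_SAMPLES)
    ]


def mel_frame_count(n_samples=N_SAMPLES, hop=HOP_LENGTH):
    """Number of spectrogram frames (time steps) produced for n_samples of audio."""
    return n_samples // hop


if __name__ == "__main__":
    seventy_seconds = [0.0] * (70 * SAMPLE_RATE)
    windows = split_into_windows(seventy_seconds)
    # prints: 3 windows of 3000 frames each
    print(len(windows), "windows of", mel_frame_count(), "frames each")
```

A 30-second window therefore becomes 3,000 spectrogram frames, 10 ms apart. In the actual package, `whisper.pad_or_trim` and `whisper.log_mel_spectrogram` perform these steps on NumPy arrays or PyTorch tensors.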

The decoder, in turn, takes the embeddings and generates text. It uses the context provided by the encoder to predict the next token in the sequence. The decoder considers the previous tokens (subword units produced by a byte-level BPE tokenizer) and the encoded audio window to compute output probabilities for each next token. Finally, the tokens are converted into readable text. Whisper also predicts punctuation and capitalization directly from the audio, though combining it with language models can further improve accuracy.
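The token-by-token loop can be illustrated with greedy decoding, which always picks the most probable next token. This is a minimal sketch: the special tokens are the ones Whisper’s tokenizer uses to request an English transcription without timestamps, but `toy_model` is a hypothetical lookup table standing in for the real decoder, which would also attend to the encoder’s embeddings. Whisper itself can decode greedily or with beam search.

```python
from typing import Callable, Dict, List

END = "<|endoftext|>"
# Special tokens that open an English, no-timestamp transcription in Whisper's tokenizer.
PROMPT = ["<|startoftranscript|>", "<|en|>", "<|transcribe|>", "<|notimestamps|>"]


def greedy_decode(
    next_token_probs: Callable[[List[str]], Dict[str, float]],
    max_tokens: int = 50,
) -> List[str]:
    """Repeatedly append the most probable next token until END or the limit."""
    tokens = list(PROMPT)
    output: List[str] = []
    for _ in range(max_tokens):
        probs = next_token_probs(tokens)   # distribution over the next token
        best = max(probs, key=probs.get)   # greedy choice
        if best == END:
            break
        tokens.append(best)
        output.append(best)
    return output


def toy_model(tokens: List[str]) -> Dict[str, float]:
    """Hypothetical stand-in for the decoder: looks only at the last token."""
    table = {
        "<|notimestamps|>": {"Hello": 0.9, "Hi": 0.1},
        "Hello": {",": 0.6, " world": 0.4},
        ",": {" world": 0.8, END: 0.2},
        " world": {".": 0.7, END: 0.3},
        ".": {END: 1.0},
    }
    return table[tokens[-1]]


if __name__ == "__main__":
    print("".join(greedy_decode(toy_model)))
```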

What are OpenAI Whisper features?

The OpenAI Whisper model comes with a range of features that make it stand out in automatic speech recognition and speech-to-text translation.

Multilingual support

Thanks to its extensive training on diverse datasets, Whisper handles different languages without separate, language-specific models. It supports transcription in up to 98 languages and translates speech from up to 30 languages into English.

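High accuracy and reasonable speed

Whisper transcribes audio recordings with high accuracy. Its word error rate (WER) is around 8%, although the actual figure varies with language, domain, and audio quality. Transcribing a one-hour recording takes roughly 10 to 30 minutes, depending on the model size and hardware.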
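WER is the number of word substitutions, deletions, and insertions needed to turn the model’s output into the reference transcript, divided by the number of words in the reference. The following sketch computes it with a word-level edit distance. It assumes simple whitespace tokenization and skips the text normalization (casing, punctuation) that benchmark evaluations usually apply first.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """(substitutions + deletions + insertions) / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] = minimum edits to turn the first i-1 reference words into hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion of a reference word
                curr[j - 1] + 1,     # insertion of an extra word
                prev[j - 1] + cost,  # substitution, or match when cost == 0
            )
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    print(word_error_rate("the cat sat on the mat", "the cat sat on mat"))
```

For example, dropping one word from a six-word reference gives a WER of 1/6, or about 17%. Because insertions count too, WER can exceed 100%.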

Built-in translation

The model translates speech directly into English without a separate model for each language pair, drawing on the non-English audio with English transcripts in its training data.
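Under the hood, the task is selected by special tokens at the start of the decoder’s input: a token for the spoken language, followed by either a transcribe or a translate token. The helper below assembles that prefix. The token strings follow Whisper’s tokenizer, while `build_task_prompt` is a hypothetical function for illustration.

```python
SOT = "<|startoftranscript|>"
NO_TIMESTAMPS = "<|notimestamps|>"
TASK_TOKENS = {"transcribe": "<|transcribe|>", "translate": "<|translate|>"}


def build_task_prompt(language: str, task: str, timestamps: bool = False) -> list:
    """Assemble the special-token prefix that tells the decoder what to do.

    `language` is the code of the language spoken in the audio; with
    task="translate" the decoder is asked to produce English text.
    """
    if task not in TASK_TOKENS:
        raise ValueError(f"task must be one of {sorted(TASK_TOKENS)}")
    tokens = [SOT, f"<|{language}|>", TASK_TOKENS[task]]
    if not timestamps:
        tokens.append(NO_TIMESTAMPS)  # request plain text without timestamp tokens
    return tokens


if __name__ == "__main__":
    print(build_task_prompt("de", "translate"))
```

In the openai-whisper package you don’t build this prefix by hand; you pass `task="translate"` (and optionally `language="de"`) to `model.transcribe()`, or use `--task translate` on the command line.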

Handling of speech variability

The best part is that Whisper transcribes speech with different accents and, to some degree, atypical speech. It also copes with different dialects and speaking styles, and to some extent with conversations that switch between languages, although accuracy varies.

Robustness to noise

OpenAI Whisper handles audio of varying quality, including recordings made in noisy environments. It can work with raw audio, reducing the need for preprocessing steps like noise cancellation.

Context understanding

While primarily a speech-to-text model, Whisper’s training allows it to understand context in speech to some extent, improving the coherence and accuracy of the output.

Integration capabilities

Given its open-source nature, Whisper can be integrated into existing systems or used to build new speech recognition applications with relative ease. OpenAI also offers an API with two speech-to-text endpoints, transcriptions and translations, based on the open-source large-v2 Whisper model.
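To show what a call to the hosted API involves, here is a standard-library sketch that builds, but does not send, a multipart request to either endpoint. The URL pattern and the `whisper-1` model name follow OpenAI’s API reference. The helper itself is hypothetical; in practice you would more likely use the official `openai` Python SDK (`client.audio.transcriptions.create(...)`).

```python
import urllib.request
import uuid

# OpenAI audio endpoints; "whisper-1" is the API name of the hosted Whisper model.
API_BASE = "https://api.openai.com/v1/audio"
ENDPOINTS = ("transcriptions", "translations")


def build_whisper_request(
    audio_bytes: bytes,
    filename: str,
    api_key: str,
    endpoint: str = "transcriptions",
    model: str = "whisper-1",
) -> urllib.request.Request:
    """Build (but do not send) a multipart/form-data POST for the Whisper API."""
    if endpoint not in ENDPOINTS:
        raise ValueError(f"endpoint must be one of {ENDPOINTS}")
    boundary = uuid.uuid4().hex

    # Text field carrying the model name.
    model_part = (
        f"--{boundary}\r\n"
        'Content-Disposition: form-data; name="model"\r\n\r\n'
        f"{model}\r\n"
    ).encode()
    # File field header; the raw audio bytes follow it.
    file_header = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="file"; filename="{filename}"\r\n'
        "Content-Type: application/octet-stream\r\n\r\n"
    ).encode()
    closing = f"\r\n--{boundary}--\r\n".encode()

    body = model_part + file_header + audio_bytes + closing
    return urllib.request.Request(
        f"{API_BASE}/{endpoint}",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": f"multipart/form-data; boundary={boundary}",
        },
    )
```

Sending it takes one more call, `urllib.request.urlopen(req)`, which by default returns JSON with the transcript in a `text` field. Keep the API key out of source code, for example by reading it from an environment variable.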

What is OpenAI Whisper used for

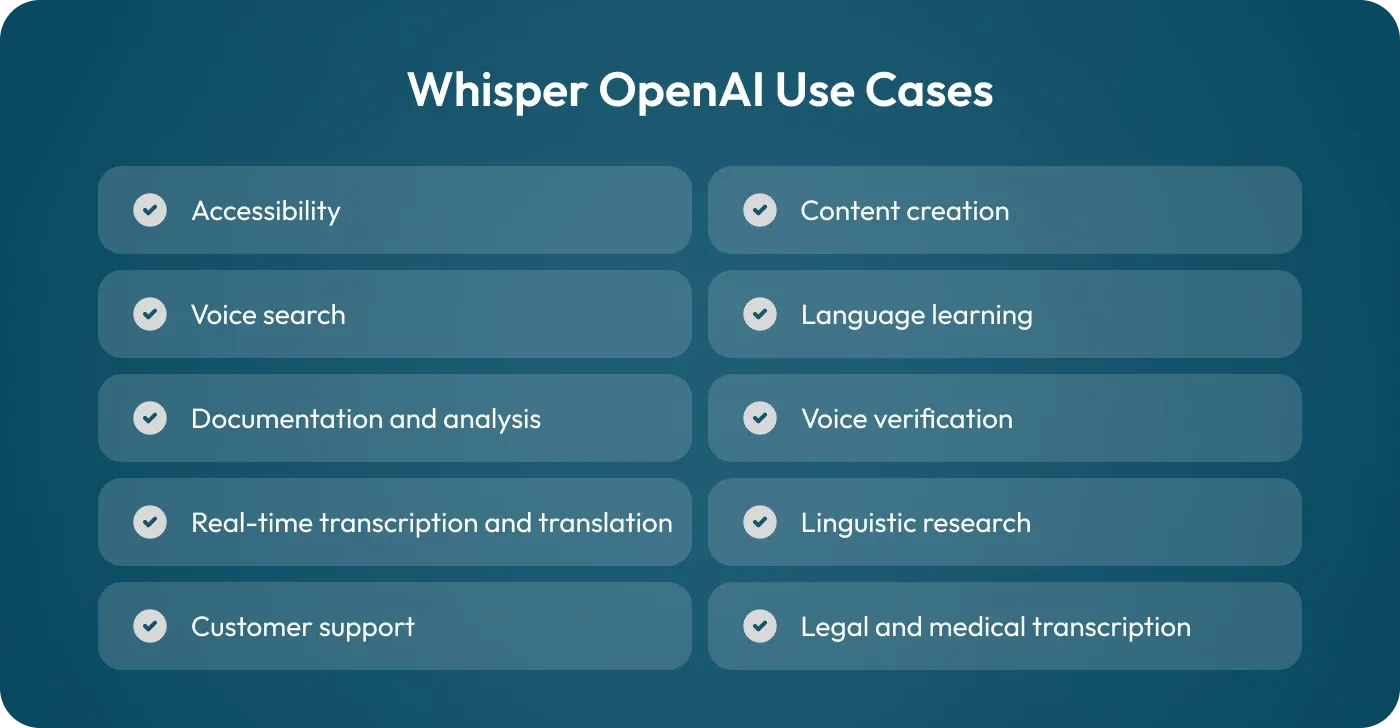

OpenAI Whisper has many potential business applications. We compiled a list of the most common use cases to spark ideas for your own business.

Accessibility

Making your content accessible to people with hearing impairments, or to those who prefer reading over listening, helps you comply with accessibility regulations and attracts more people to your project. Whisper can provide accurate transcriptions for your content.

Voice search

Given that roughly 30% of internet users aged 16-64 worldwide use voice search each week, you can benefit from implementing Whisper for on-site voice search. It can make your website more inclusive, especially if you enable multilingual translation, allowing users to search in their native language.

Documentation and analysis

Converting spoken language into written text across multiple languages is helpful for transcribing meetings, lectures, interviews, or any speech for documentation, training, and analysis.

Real-time transcription and translation

Whisper doesn’t offer real-time transcription and translation out of the box. However, it’s possible to build Whisper-powered solutions that enable near-real-time transcription. Such applications are invaluable for international conferences and cross-border partnerships.
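One common way to approximate real-time transcription is to buffer incoming audio and hand the model overlapping windows as soon as enough audio has arrived. The generator below is a hypothetical sketch of that buffering logic only. The window and overlap sizes are parameters you would tune, and merging words duplicated in the overlap is left out.

```python
from typing import Iterable, Iterator, List


def stream_windows(
    chunks: Iterable[List[float]], window: int, overlap: int
) -> Iterator[List[float]]:
    """Yield overlapping fixed-size windows from a stream of audio chunks."""
    if not 0 <= overlap < window:
        raise ValueError("overlap must satisfy 0 <= overlap < window")
    buffer: List[float] = []
    fresh = 0  # samples in the buffer that no yielded window has covered yet
    for chunk in chunks:
        buffer.extend(chunk)
        fresh += len(chunk)
        while len(buffer) >= window:
            yield buffer[:window]
            # Keep the last `overlap` samples so words cut at the boundary reappear.
            buffer = buffer[window - overlap:]
            fresh = len(buffer) - overlap
    if fresh > 0:
        yield buffer  # flush the final, shorter window containing unseen audio
```

With 16 kHz audio, `window=5 * 16_000` and `overlap=16_000` would emit 5-second windows that share one second with the previous window. Each window could then be passed to `model.transcribe()` as a float32 NumPy array.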

Customer support

Whisper lets you improve customer service by handling inquiries in multiple languages more effectively. It can transcribe customer calls for later analysis and compliance reports. With a custom near-real-time pipeline, you can also use Whisper to translate customer requests as they come in.

Content creation

Whisper simplifies content creation for podcasters and video creators. It can automatically transcribe episodes for editing, for SEO, or to provide subtitles for viewers. With some additional development, you can also enable near-real-time captioning for live events.
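Subtitles show how little glue code is needed. The result of openai-whisper’s `transcribe()` contains a list of segments with `start` and `end` times in seconds and the recognized `text`. The sketch below turns such segments into the SRT subtitle format; the function names are hypothetical.

```python
def format_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp: HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def segments_to_srt(segments: list) -> str:
    """Turn Whisper-style segments (start, end, text) into numbered SRT cues."""
    cues = []
    for index, seg in enumerate(segments, start=1):
        cues.append(
            f"{index}\n"
            f"{format_timestamp(seg['start'])} --> {format_timestamp(seg['end'])}\n"
            f"{seg['text'].strip()}\n"
        )
    return "\n".join(cues)  # a blank line separates consecutive cues


if __name__ == "__main__":
    demo = [
        {"start": 0.0, "end": 2.5, "text": " Hello there."},
        {"start": 2.5, "end": 61.04, "text": " Welcome back."},
    ]
    print(segments_to_srt(demo))
```

For a real file you might call `segments_to_srt(model.transcribe("episode.mp3")["segments"])`. The openai-whisper command-line tool can also write SRT files directly with `--output_format srt`.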

Language learning

If you’re running language learning courses or want to create a training plan for your team, Whisper could be the right choice. It can transcribe students’ speech so you can analyze it and help them improve their communication skills.

Voice verification

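You can strengthen your security systems by adding Whisper’s speech recognition to voice-based authentication, for example to check that a user has spoken the expected passphrase. Whisper transcribes what is said, not who said it, so verifying the speaker’s identity requires a separate speaker-recognition model. Voice verification is typically used alongside other authentication methods in financial and healthcare institutions, to authorize commands for smart home devices, and in access control systems for secure buildings.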

Linguistic research

Whisper is also used in academic or commercial research to study speech patterns, dialects, language evolution, or the development of new language models.

Legal and medical transcription

You can create high-quality transcription services for legal proceedings, medical recordings, or any professional setting where accurate transcription is crucial.

How Whisper differs from other large speech-to-text services

You may wonder why you should use the OpenAI Whisper model when Google Speech-to-Text and Amazon Transcribe are available. Here are some insights to help you choose between these services.

Whisper supports nearly the same number of languages as Google Speech-to-Text and Amazon Transcribe.

Whisper is often reported to match or exceed its competitors in accuracy, especially with multilingual and noisy audio, though results depend on the language, domain, and recording conditions.

OpenAI offers an open-source solution with flexibility in deployment and use, while Google and Amazon's solutions are optimized for their cloud platforms.

Whisper has strong customization potential, while Google and Amazon offer more out-of-the-box solutions for specific use cases like call centers or media production.

The open-source Whisper model is free to use, but running it requires computing resources, which come with their own costs; OpenAI’s hosted Whisper API is billed per minute of audio. Google and Amazon offer pay-as-you-go services with different rates for different types of transcription.
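To compare the two pricing models for your own workload, a back-of-the-envelope calculation is often enough. In this sketch every price and throughput figure is a placeholder assumption, not a quoted rate. `realtime_factor` is how many minutes of audio your hardware transcribes per minute of GPU time, and `fixed_monthly` covers costs such as maintenance.

```python
def api_cost(audio_minutes: float, price_per_minute: float) -> float:
    """Pay-as-you-go cost: every minute of audio is billed."""
    return audio_minutes * price_per_minute


def self_hosted_cost(
    audio_minutes: float,
    gpu_price_per_hour: float,
    realtime_factor: float,
    fixed_monthly: float = 0.0,
) -> float:
    """GPU time needed for the audio, plus fixed monthly costs."""
    gpu_hours = audio_minutes / realtime_factor / 60
    return gpu_hours * gpu_price_per_hour + fixed_monthly


def break_even_minutes(
    price_per_minute: float,
    gpu_price_per_hour: float,
    realtime_factor: float,
    fixed_monthly: float,
) -> float:
    """Monthly audio volume above which self-hosting becomes cheaper than the API."""
    gpu_cost_per_audio_minute = gpu_price_per_hour / (realtime_factor * 60)
    saving_per_minute = price_per_minute - gpu_cost_per_audio_minute
    if saving_per_minute <= 0:
        return float("inf")  # self-hosting never pays off at these rates
    return fixed_monthly / saving_per_minute
```

With placeholder numbers such as an API price of $0.006 per minute, a $1.20-per-hour GPU running at 20x real time, and $100 of fixed monthly costs, `break_even_minutes(0.006, 1.20, 20, 100)` comes out at about 20,000 audio minutes per month. Substitute your own measured throughput and current prices before drawing conclusions.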

What are Whisper model’s limitations?

While OpenAI Whisper has many advantages, it also comes with some limitations you should be aware of.

- Whisper handles English recordings, and other languages with more training data, better. This may lead to lower accuracy for less common languages and dialects.

- The model is trained on a broad dataset, but that is sometimes insufficient for very specialized vocabularies. In such cases, you may need to fine-tune the model further or combine it with relevant language models.

- Whisper wasn't primarily designed for real-time audio processing. To achieve near-real-time transcription or translation, you need additional optimization.

- The most accurate Whisper models (like the large version) are also the slowest and largest. This makes them less suitable for applications where speed and resources are critical.

- Whisper is not entirely error-free and might transcribe words incorrectly, misinterpret context, or generate text that doesn’t match the original audio. This last type of error is known as hallucination.
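For specialized vocabulary, a lighter-weight alternative to fine-tuning is to pass domain terms as a prompt. The `transcribe()` function in openai-whisper accepts an `initial_prompt` string, and OpenAI’s API accepts a `prompt` field; the model only considers roughly the last 224 tokens of a prompt. The helper below is a hypothetical sketch that deduplicates a glossary and uses a character budget as a rough stand-in for that token limit.

```python
def build_initial_prompt(terms: list, max_chars: int = 800) -> str:
    """Join unique, non-empty domain terms into a prompt within a character budget."""
    prompt = "Glossary:"
    # dict.fromkeys removes duplicates while keeping the original order.
    for term in dict.fromkeys(t.strip() for t in terms if t.strip()):
        candidate = f"{prompt} {term},"
        if len(candidate) > max_chars:
            break  # stop before exceeding the (approximate) prompt budget
        prompt = candidate
    return prompt.rstrip(",")


if __name__ == "__main__":
    print(build_initial_prompt(["Kubernetes", "gRPC", "Kubernetes", " "]))
```

You would then call something like `model.transcribe("call.mp3", initial_prompt=build_initial_prompt(["Kubernetes", "gRPC"]))`. Prompting nudges spelling and terminology toward your domain but does not guarantee it.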

How to use Whisper to overcome its limitations

To integrate Whisper into your business infrastructure, we recommend following these best practices, which help you address its errors and limitations.

Post-processing might include additional text analysis either by using language models or through human review.

Fine-tuning Whisper for specific data and use cases will better align the model with the expected vocabulary and context.

Quality audio input will reduce the percentage of the model’s misinterpretations and incorrectly transcribed words.

User feedback will help continuously adjust and improve the model’s performance.

Combining Whisper with other models, such as speaker diarization or language models for post-editing, extends what your application can do.

Setting up confidence thresholds for Whisper’s output lets you flag low-confidence transcription segments for additional review.
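Whisper doesn’t return a single confidence score, but each segment from openai-whisper’s `transcribe()` includes `avg_logprob`, `compression_ratio`, and `no_speech_prob`, which work as practical proxies. The sketch below flags segments for human review using cutoffs equal to the default threshold values of `transcribe()` (-1.0, 2.4, and 0.6). The review rule itself, which flags a segment if any cutoff is crossed, is an assumption you would tune on your own data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReviewThresholds:
    # Defaults mirror the threshold values of openai-whisper's transcribe().
    min_avg_logprob: float = -1.0
    max_compression_ratio: float = 2.4
    max_no_speech_prob: float = 0.6


def segments_needing_review(segments, thresholds=ReviewThresholds()):
    """Return (segment id, reasons) for every segment that crosses a cutoff."""
    flagged = []
    for seg in segments:
        reasons = []
        if seg["avg_logprob"] < thresholds.min_avg_logprob:
            reasons.append("low average log-probability")
        if seg["compression_ratio"] > thresholds.max_compression_ratio:
            reasons.append("repetitive text (high compression ratio)")
        if seg["no_speech_prob"] > thresholds.max_no_speech_prob:
            reasons.append("probably no speech")
        if reasons:
            flagged.append((seg["id"], reasons))
    return flagged
```

A high compression ratio often indicates repetitive output, a frequent symptom of hallucination. For finer granularity, `transcribe(..., word_timestamps=True)` also attaches a `probability` to each word.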

Conclusions

To sum up, OpenAI created a powerful ASR model with vast opportunities for customization and adaptation to specific business use cases. Whisper transcribes and translates human speech with relatively few errors. Nevertheless, as with any complex system, Whisper has its limitations. You can address them with a professional AI development team, meticulous testing, and the AI integration best practices described in this article.

If you’re looking for a team to incorporate Whisper into your business, contact the DigitalSuits team using the form. Our specialists have hands-on experience with OpenAI integration, and we’re ready to assist you in this impressive journey.

Frequently asked questions

What are Whisper hallucinations, and why do they happen?

Whisper sometimes adds text to its output that wasn’t in the audio input. This mostly happens in noisy environments, during long silences, or when speech is unclear. The model may interpret the context incorrectly, fill in gaps in the speech, or produce irrelevant output based on patterns it learned from its training data.

Are there complementary libraries to OpenAI Whisper?

Yes, you can use libraries relevant to your use case, such as WhisperX, faster-whisper, Whisper JAX, stable-ts (stabilized timestamps), pyannote.audio (speaker diarization), and Insanely Fast Whisper.

Should I run OpenAI Whisper on GPU?

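A GPU can speed up transcription considerably, especially with the larger models, while the smaller models can run acceptably on a CPU. However, weigh the cost of GPU resources against your speed requirements before choosing one for your project.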
