Have you ever wondered how companies can use AI models in their applications without spending countless months building infrastructure from scratch? For many companies, AI development used to mean meticulous server management, scaling issues, and security risks, but that was before Hugging Face Inference Endpoints.

Hugging Face Inference Endpoints help you deploy machine learning models at scale without the headaches of manual configuration or deep DevOps knowledge. Whether your project is a chatbot, image classifier, or generative AI tool, they ensure seamless deployment.

In this article, we’ll walk you through what Hugging Face Inference Endpoints are, their benefits, supported machine learning tasks, their security measures, and real-world use cases, so you can decide whether they’re the right fit for integrating AI into your applications. To learn more about the platform itself, check out our guide on what Hugging Face is and how it can benefit your project.

TL;DR: Hugging Face Inference Endpoints – Deploy ML models without infrastructure hassles

Hugging Face Inference Endpoints are managed APIs that make it easy to deploy and scale ML models directly from the Hugging Face Hub or your own models.

The key benefits include scale-to-zero cost savings, autoscaling infrastructure, ease of use, customization, production-ready deployment, and more.

Models are served securely, with TLS/SSL encryption, support for authentication, and private network access via AWS PrivateLink.

Hugging Face is SOC2 Type 2 certified and GDPR compliant, which makes Inference Endpoints suitable for enterprise deployments.

What are Hugging Face Inference Endpoints?

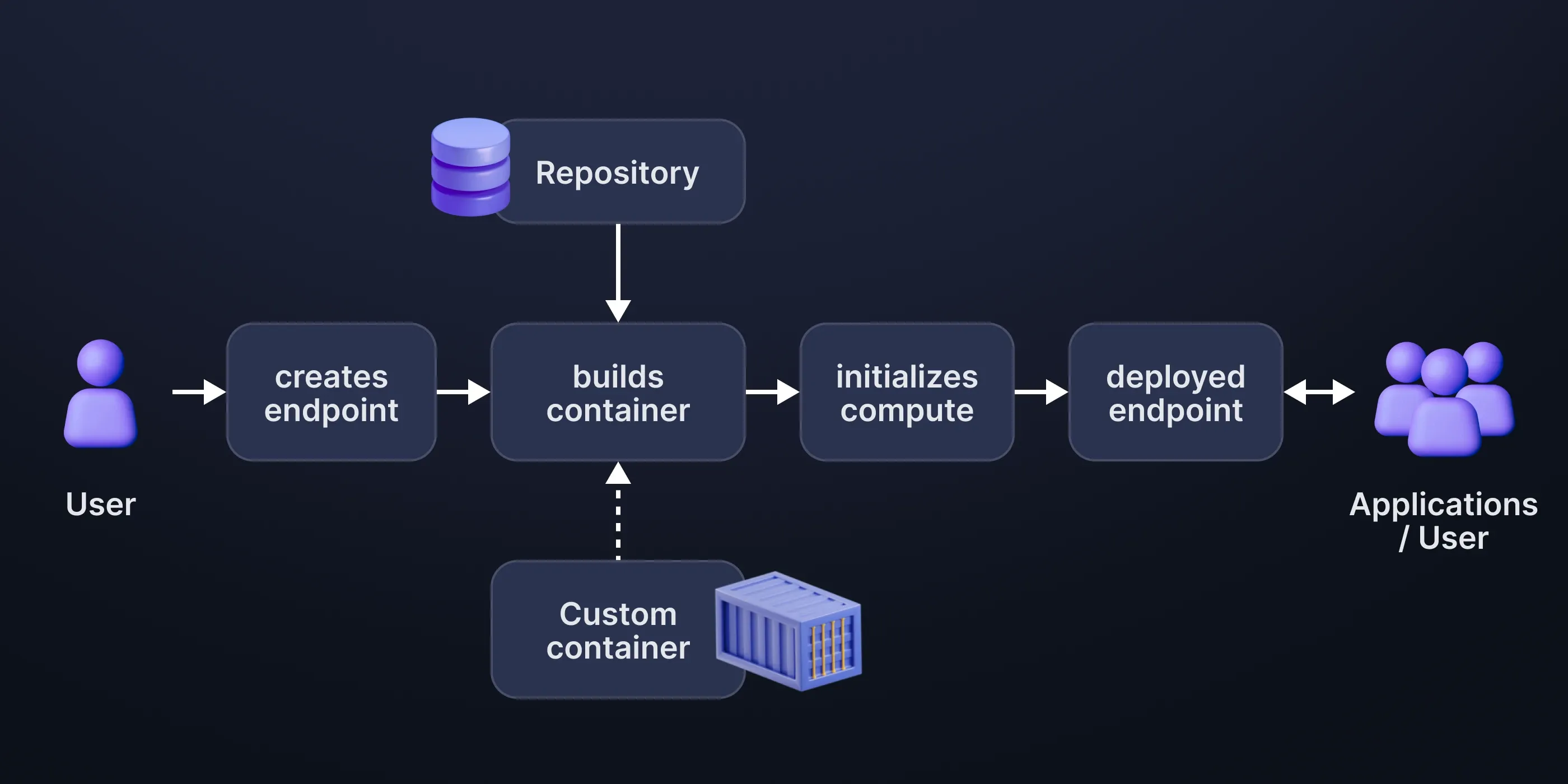

Hugging Face Inference Endpoints are managed APIs for deploying machine learning models from the Hugging Face Hub or custom models. They provide a scalable REST API with no server setup, Docker files, or manual deployment required.

All endpoints run on autoscaling infrastructure managed by Hugging Face, with reliability and security assured. Models are deployed from a repository in the Hub, with container images decoupled from source code for improved security.

Benefits of Hugging Face Inference Endpoints

Hugging Face Inference Endpoints offer a range of benefits that make deploying machine learning models easy, scalable, and secure.

Production-ready deployment

Inference Endpoints let you deploy models directly from the Hugging Face Hub in a few clicks. They run on dedicated, high-performance infrastructure that keeps your models continuously available for production use, so there are no servers to set up and no deployment pipelines to maintain.

Autoscaling and cost efficiency

The platform automatically scales resources with request volume, provisioning more capacity at peak load and reducing it during low-traffic periods to save costs. Autoscaling is complemented by scale-to-zero, which shuts an endpoint down entirely when it receives no requests, making deployments cost-effective for apps with fluctuating traffic.

Ease of use and integration

Hugging Face Inference Endpoints are designed to be user-friendly. Models can be deployed quickly through simple CLI commands or the web interface, and each endpoint exposes a REST API that can be called from any language or environment.
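To make the REST integration concrete, here is a minimal sketch of a client that uses only the Python standard library. The endpoint URL and token are placeholders, the helper names `build_request` and `query` are our own, and the `{"inputs": ...}` payload shape is an assumption that fits common text tasks; your model may expect a different body.

```python
import json
import urllib.request
from typing import Any, Optional


def build_request(endpoint_url: str, text: str,
                  token: Optional[str] = None) -> urllib.request.Request:
    """Prepare a JSON POST request for an endpoint (nothing is sent yet)."""
    headers = {"Content-Type": "application/json"}
    if token:
        # Endpoints that require authentication expect a bearer token.
        headers["Authorization"] = f"Bearer {token}"
    body = json.dumps({"inputs": text}).encode("utf-8")
    return urllib.request.Request(endpoint_url, data=body,
                                  headers=headers, method="POST")


def query(endpoint_url: str, text: str, token: Optional[str] = None) -> Any:
    """Send the request and decode the JSON response."""
    request = build_request(endpoint_url, text, token)
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))
```

Calling `query("https://<your-endpoint-url>", "I love this product", token="<your-token>")` would return whatever JSON your deployed model produces.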

Comprehensive task support

The platform natively supports a wide range of ML tasks across NLP, vision, and audio. It covers the major model types in the Hugging Face ecosystem, so deploying models for text classification, question answering, image classification, and similar tasks is straightforward. Multi-model endpoints also support dynamic inference over multiple models.

Customization and flexibility

Alongside native tasks, users can upload custom container images and specify custom inference handlers to enhance or tailor inference logic. This allows organizations to integrate proprietary algorithms, perform sophisticated pre- or post-processing, or support niche use cases not natively supported.
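As an illustration, a custom inference handler is typically a small Python class placed in a `handler.py` file in the model repository. The sketch below follows the `EndpointHandler` convention with `__init__` and `__call__`, but the keyword-matching "model" is a toy stand-in we made up so the example runs without any dependencies; a real handler would load its model from `path`.

```python
from typing import Any, Dict, List


class EndpointHandler:
    def __init__(self, path: str = ""):
        # A real handler would load model weights from `path` here.
        # This toy word list is a hypothetical stand-in for a model.
        self.positive_words = {"good", "great", "love"}

    def __call__(self, data: Dict[str, Any]) -> List[Dict[str, Any]]:
        # Pre-processing: read the request payload and normalize the text.
        text = data.get("inputs", "")
        tokens = [token.strip(".,!?") for token in text.lower().split()]

        # "Inference": count how many tokens match the positive word list.
        matches = sum(token in self.positive_words for token in tokens)

        # Post-processing: shape the response returned to the caller.
        label = "POSITIVE" if matches > 0 else "NEGATIVE"
        return [{"label": label, "matched_words": matches}]
```

The same structure leaves room for proprietary logic before and after the model call, which is the main reason to write a handler in the first place.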

Advanced security and compliance

Your data protection is the highest priority. The endpoints support TLS/SSL encryption, role-based access controls, private network capabilities like AWS PrivateLink, and certifications like SOC2 Type 2 and GDPR. They are built to protect sensitive data and live up to high security standards.

Detailed monitoring and management

The service provides detailed logs, metrics, and version controls that help teams track endpoint performance, debug issues, and roll out updates without downtime. These operational insights help keep model deployments reliable, efficient, and simple to maintain.

Supported Machine Learning tasks

Hugging Face Inference Endpoints offer seamless support for a wide range of machine learning tasks through the Transformers, Sentence-Transformers, and Diffusers libraries. Regardless of whether you are working with natural language processing, computer vision, or audio processing, you can deploy models for the most common tasks easily.

Here are the supported tasks:

Text to image (generate images from text prompts)

Text classification (classify text into categories such as sentiment or topics)

Zero-shot classification (perform classification without task-specific training data)

Token classification (named-entity recognition and similar tasks)

Question answering (extract answers from context passages)

Fill mask (predict missing words in a sentence)

Summarization (condense lengthy text into shorter summaries)

Translation (translate text between languages)

Text-to-text generation (generate new text based on input, e.g., rewriting)

Text generation (produce coherent text continuations)

Feature extraction (extract features from text for further analysis)

Sentence embeddings (generate vector representations of sentences)

Sentence similarity (measure how similar two sentences are)

Ranking (sort items based on relevance or similarity)

Image classification (recognize objects or scenes in images)

Automatic speech recognition (transcribe speech to text)

Audio classification (classify audio clips)

Object detection (detect objects within images)

Image segmentation (segment parts of an image)

Table question answering (extract answers from tabular data)

Conversational models (build chatbots and dialogue systems)

Custom tasks (define your own model and task logic with custom handlers)

On top of that, custom inference handlers allow for more advanced tasks, like speaker diarization or special custom logic for advanced pipelines. Handlers offer total control of the whole request process, from input pre-processing to result post-processing.

Hugging Face Inference Endpoints security measures

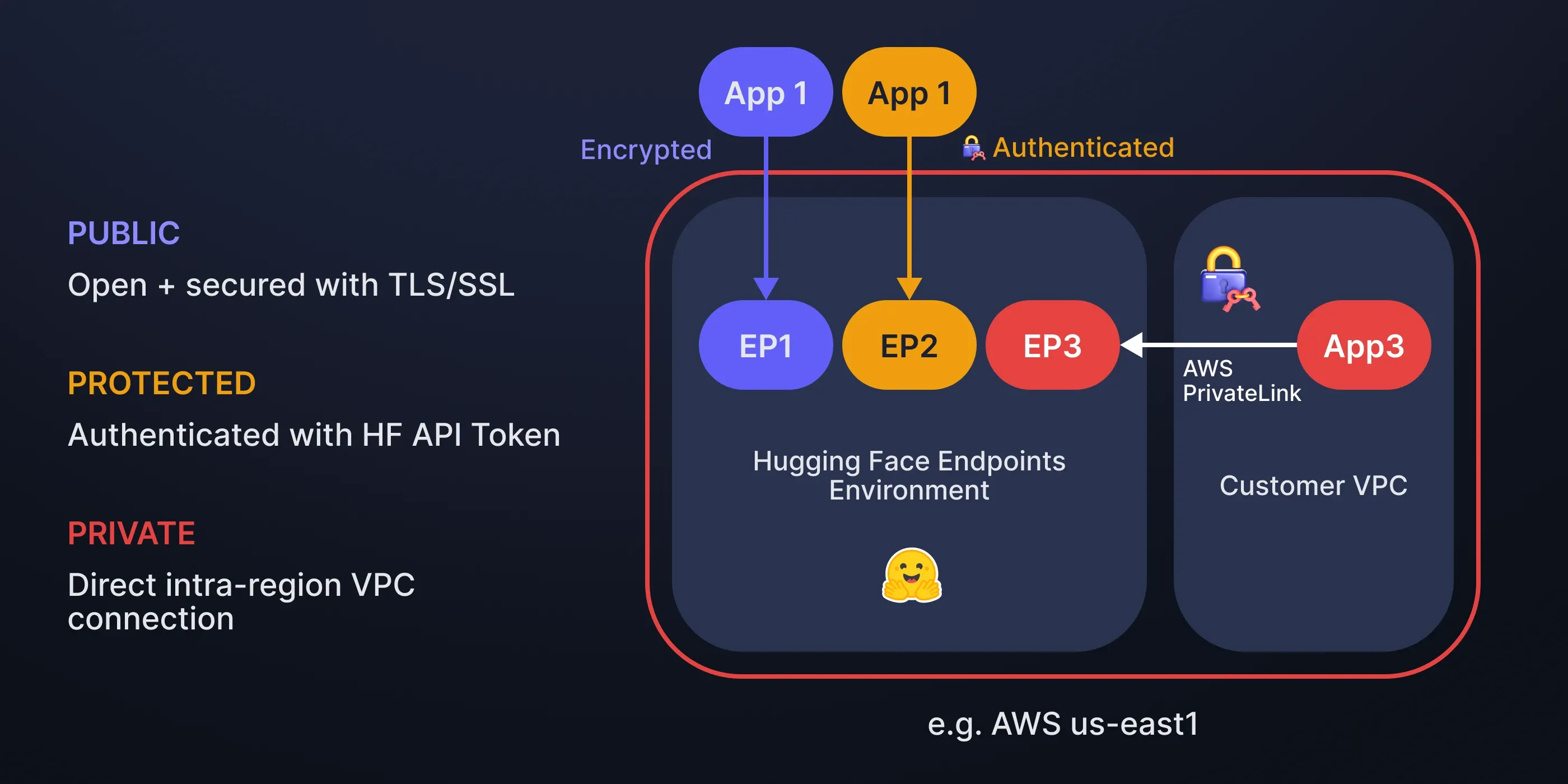

Hugging Face Inference Endpoints offer three security levels, balancing accessibility and protection:

Public. Accessible from the internet over a TLS/SSL-encrypted connection. No authentication is required.

Protected. Also accessible from the internet over TLS/SSL, but every request must be authenticated with a valid Hugging Face token.

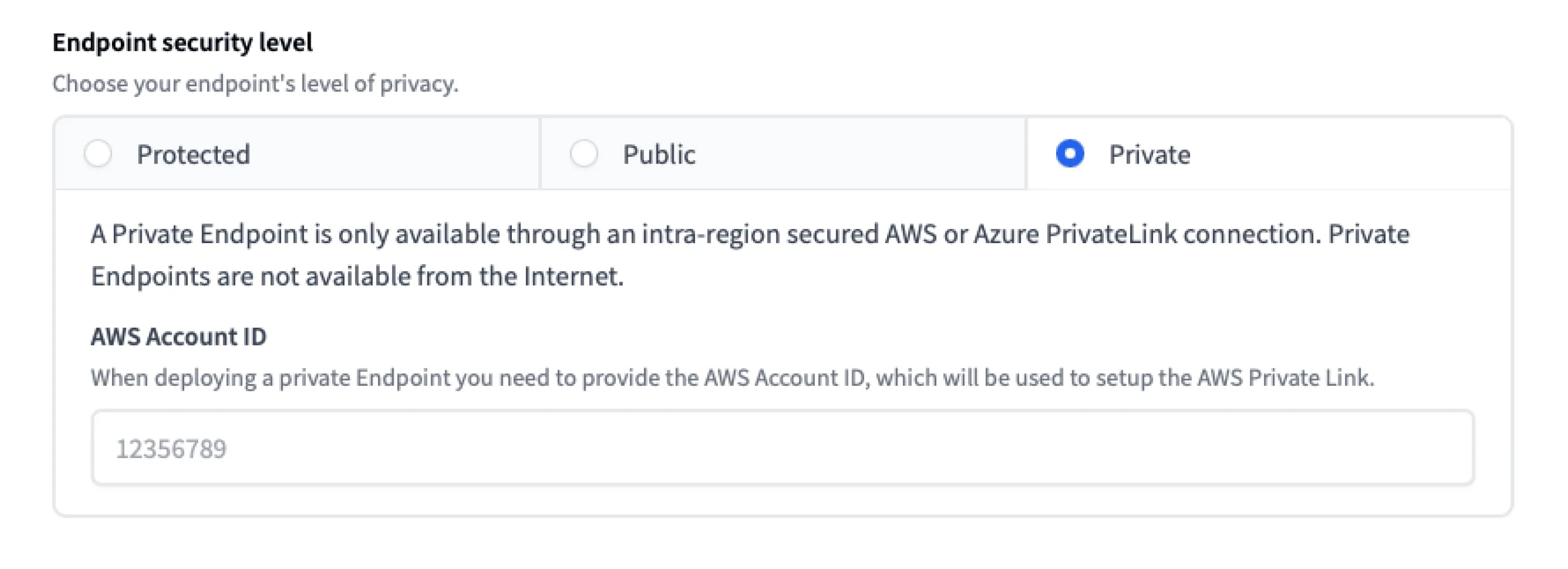

Private. Accessible only via an AWS or Azure PrivateLink secure connection in your region. The endpoint is not exposed to the public internet.

For Private endpoints, you need to provide your cloud account ID to grant access. Public and Protected endpoints require no additional setup.

The platform includes a broad set of security functions that protect your data, models, and infrastructure at all levels. The important security features provided are:

Data encryption in transit

All information exchanged between clients and Hugging Face Inference Endpoints is protected with TLS/SSL, so inputs, outputs, and associated data cannot be read or tampered with during transmission. Encryption in transit is a standard security measure that protects data moving across both internal and external networks.

Data privacy and retention

Hugging Face adheres to a strict data privacy policy. It does not retain your payloads or request tokens after the 30-day log retention period, and data used during inference is only held temporarily for operational purposes. The platform also offers Protected and Private endpoints to limit data exposure.

Authentication and account security

Endpoints can be protected with authentication tokens, which means users must provide valid API keys or tokens to make calls. This safeguards against unauthorized attempts and ensures that only designated users invoke your models. Team and organization-level permissions can be administered through Role-based Access Control (RBAC), which Hugging Face also supports.

Role-based access control

RBAC allows an organization to define policies and assign specific roles to team members. Each role restricts access to models, data, and endpoints according to those policies. This strengthens internal security: the fewer people who can deploy, modify, or invoke models, the lower the exposure to internal risk.

Private network access

For stricter security requirements, endpoints can be reached through private network options such as AWS PrivateLink or Azure PrivateLink. This creates a secure private connection within your cloud and blocks access to the endpoint from the public internet. Sensitive projects that require strict data control benefit most from this setup.

SOC2 Type 2 certification

Hugging Face has achieved SOC2 Type 2 certification, confirming that its security practices meet accepted industry benchmarks. The certification involves independent audits and assessments, which demonstrate the company's commitment to a secure environment, data safety, and privacy.

GDPR compliance

Hugging Face complies with the General Data Protection Regulation (GDPR) and therefore meets European requirements for handling personal data. This means it follows strict guidelines for collecting, processing, storing, and maintaining the data of European users. The platform also offers data processing agreements and other compliance options to help customers meet GDPR standards.

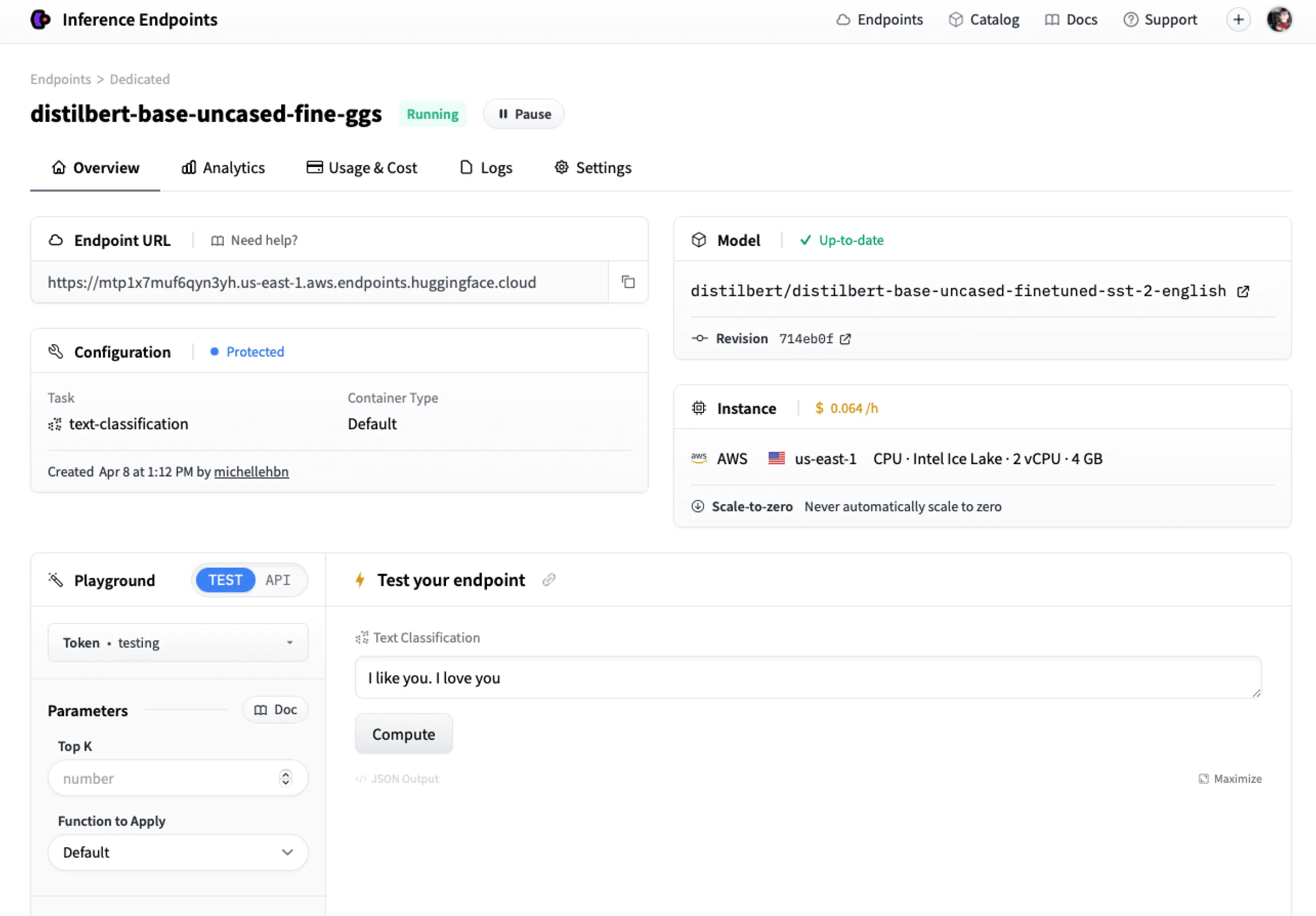

How to create an Endpoint

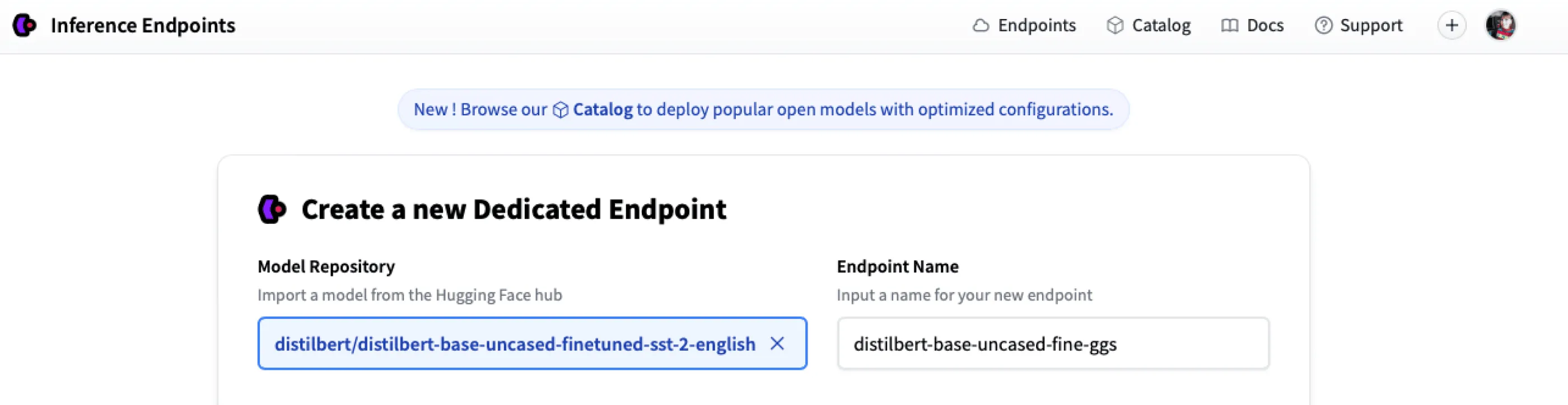

After logging in for the first time, you are directed to the Endpoint creation page. As an example, we will walk through the steps to deploy distilbert/distilbert-base-uncased-finetuned-sst-2-english for text classification.

- Enter the Hugging Face Repository ID and your preferred Endpoint name

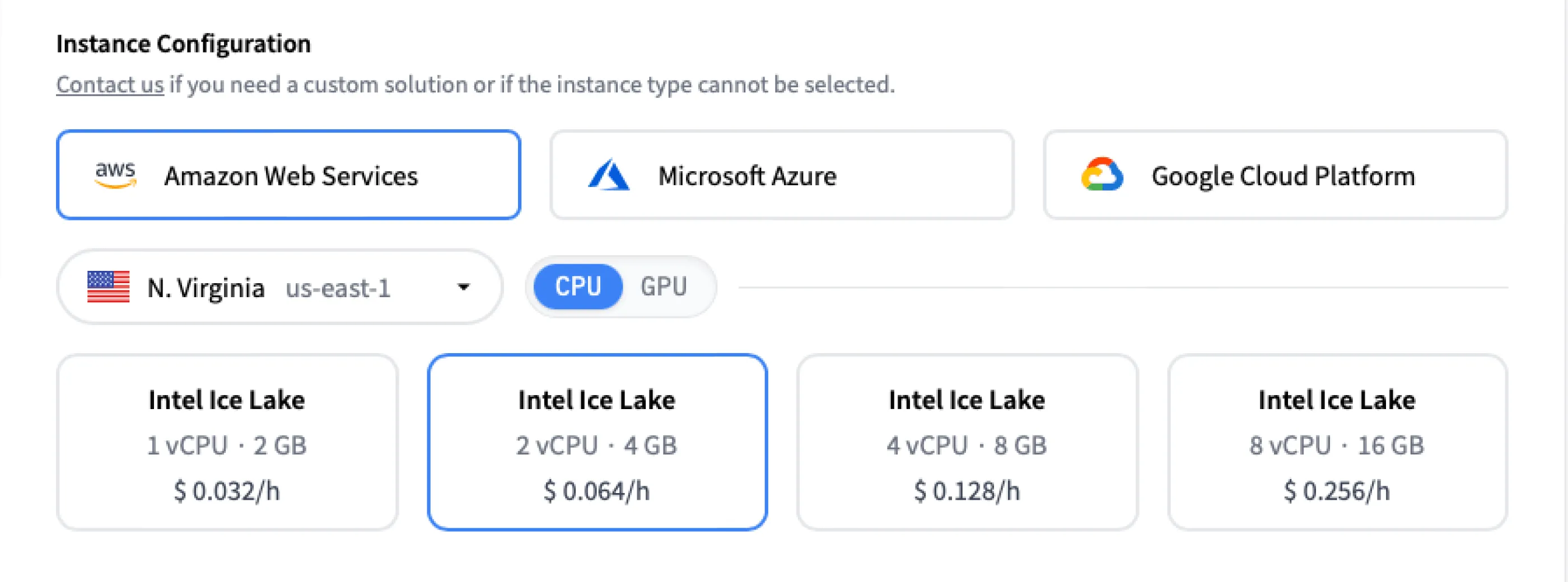

- Choose your Instance Configuration

Select the cloud provider, region, and instance type. If your desired options aren’t available, contact Hugging Face support.

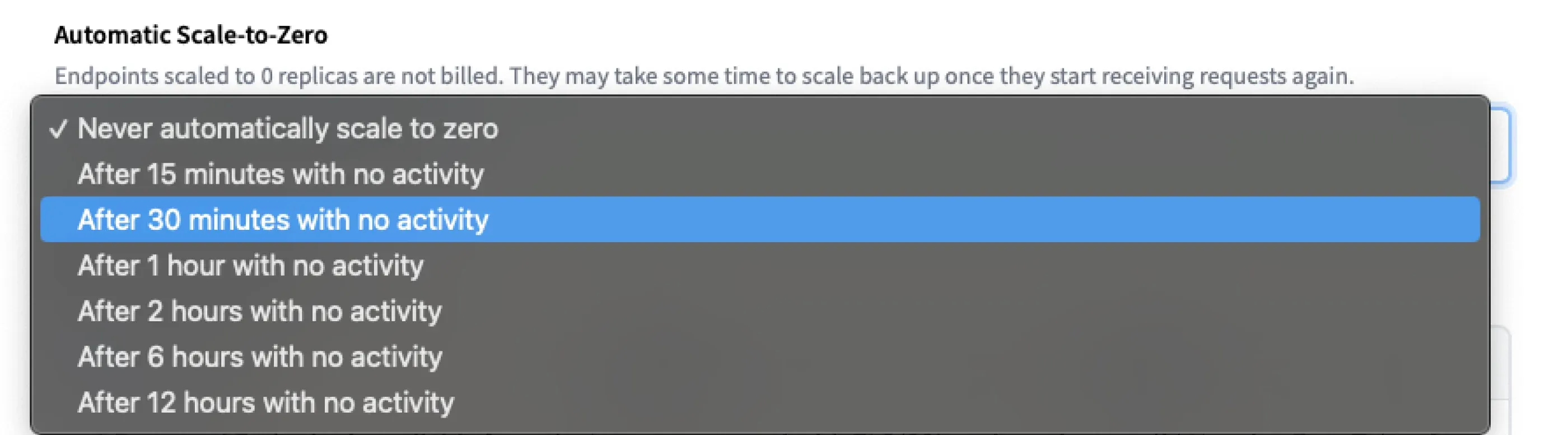

- Adjust the Automatic Scale-to-Zero setting or leave it at its default

- Choose the Security Level

- Set your Endpoint

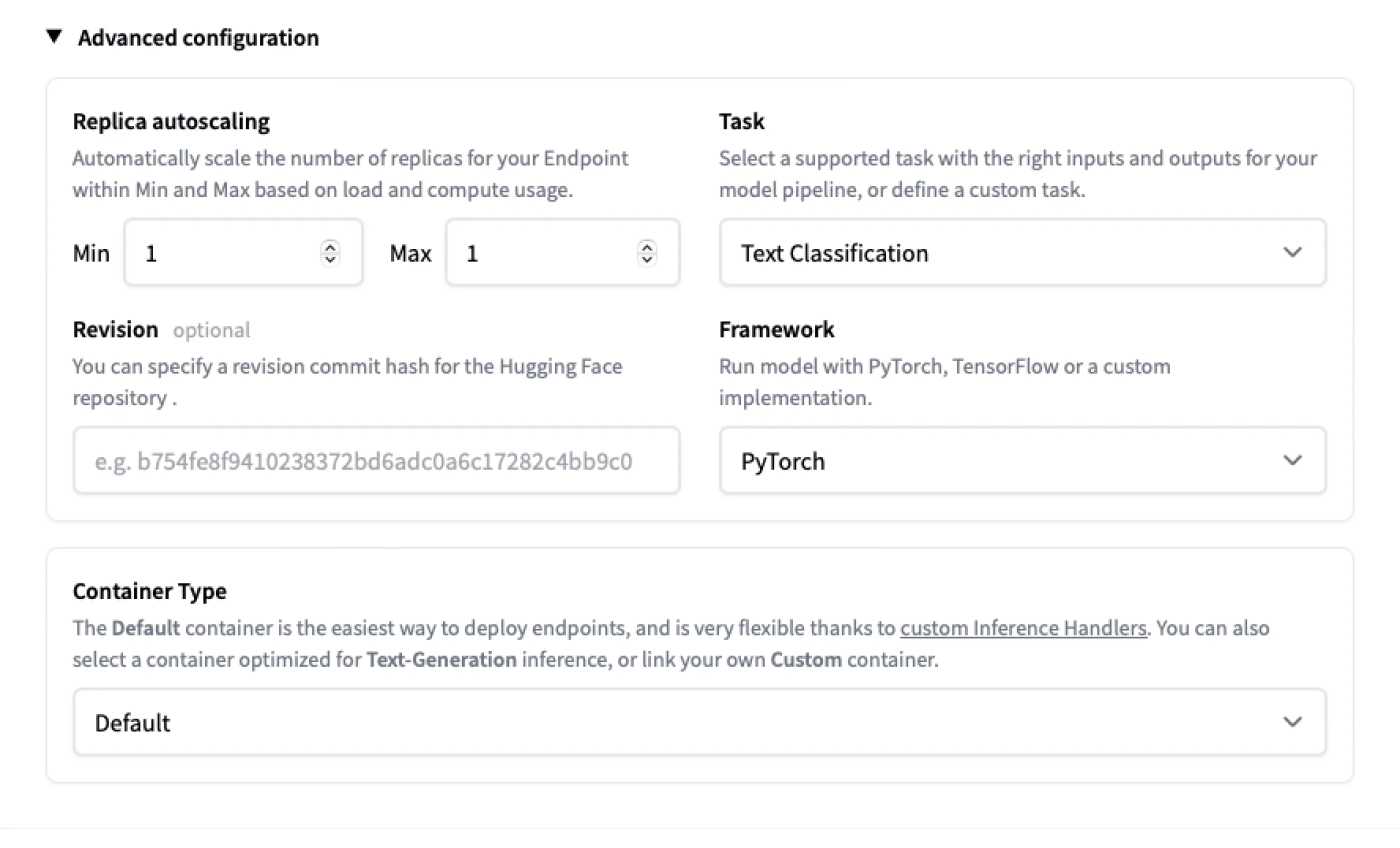

In the Advanced Configuration section, customize settings: replica autoscaling, task, revision, framework, and container type.

- Create Endpoint

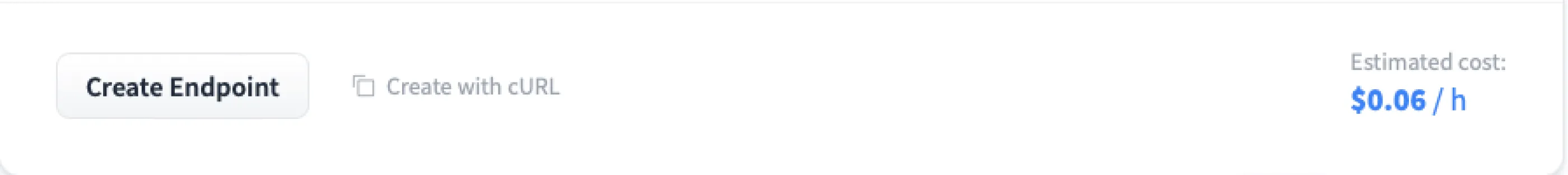

Click Create Endpoint. The estimated cost shown is per hour and does not account for autoscaling.

- Build, initialize, and run your Endpoint

The initialization time depends on the model size and may take 1-5 minutes.

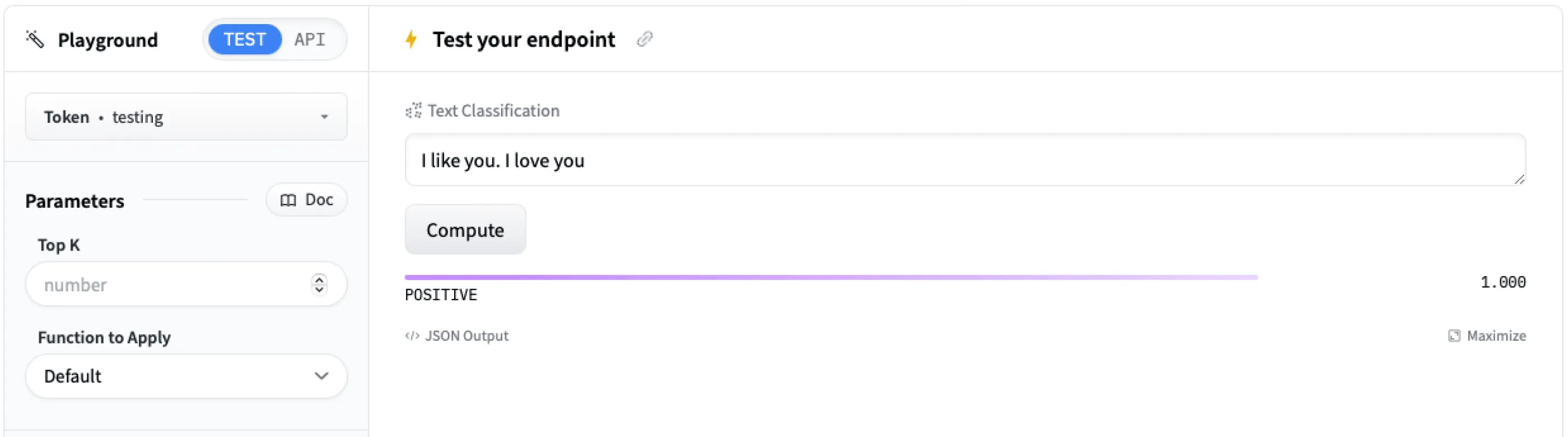

- Test your Endpoint

Test your Endpoint in the Endpoint Overview using the Playground.
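The same steps can also be scripted. The sketch below collects the form choices as keyword arguments and passes them to `create_inference_endpoint` from the `huggingface_hub` library. The endpoint name, vendor, region, instance size, and instance type are illustrative placeholders rather than recommendations, and the available values depend on your account, so check the current documentation before running it.

```python
from typing import Any, Dict


def build_endpoint_config(name: str, repository: str) -> Dict[str, Any]:
    """Collect the choices from the creation form as keyword arguments."""
    return {
        "name": name,
        "repository": repository,
        "framework": "pytorch",
        "task": "text-classification",
        "vendor": "aws",               # placeholder cloud provider
        "region": "us-east-1",         # placeholder region
        "accelerator": "cpu",
        "instance_size": "x2",         # placeholder, check available sizes
        "instance_type": "intel-icl",  # placeholder, check available types
        "type": "protected",           # security level
        "min_replica": 0,              # 0 allows scaling to zero
        "max_replica": 1,
    }


def create_endpoint(config: Dict[str, Any]):
    """Create the endpoint and block until it is running.

    Requires the huggingface_hub package and a logged-in account.
    """
    from huggingface_hub import create_inference_endpoint

    endpoint = create_inference_endpoint(**config)
    endpoint.wait()  # initialization can take a few minutes
    return endpoint


config = build_endpoint_config(
    "my-sst2-endpoint",  # hypothetical endpoint name
    "distilbert/distilbert-base-uncased-finetuned-sst-2-english",
)
```

Only `build_endpoint_config` runs here; calling `create_endpoint(config)` would start a billable deployment.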

How to update your Endpoint

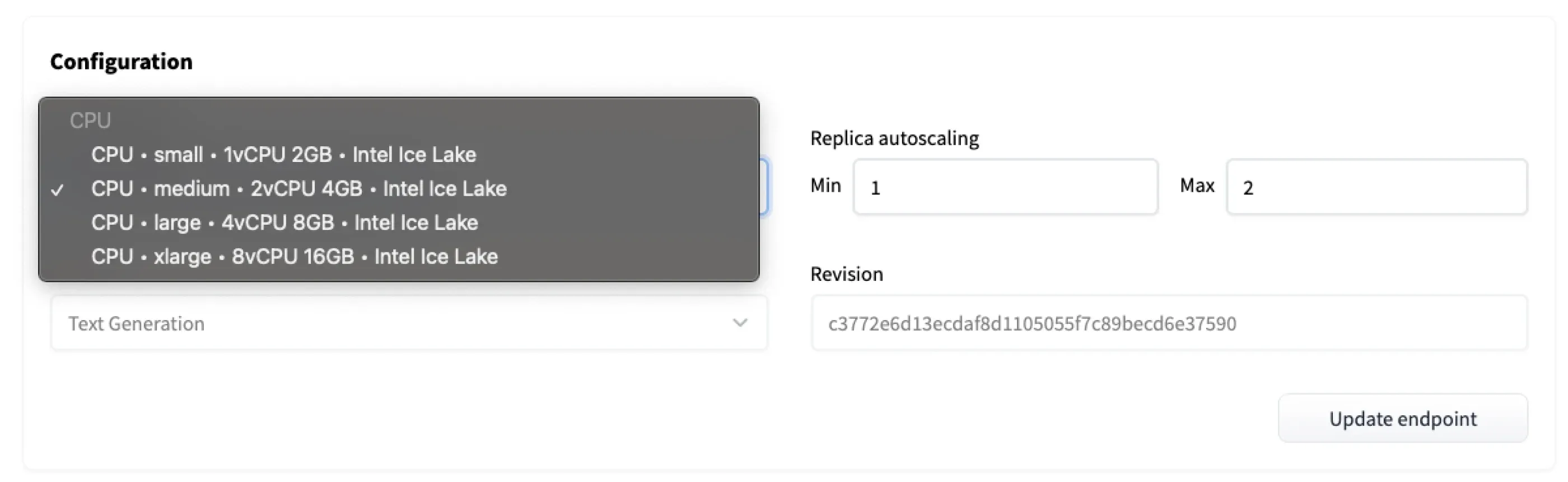

You can update a running Hugging Face Inference Endpoint directly from the Settings tab. This allows you to adjust several key configurations without redeploying – unless the Endpoint is in a failed state, in which case you’ll need to create a new one.

What you can update:

Instance size. Change to a larger or smaller size within the same type (CPU-to-CPU or GPU-to-GPU only). Note that the Instance type (e.g., CPU to GPU) cannot be changed on an existing Endpoint.

Autoscaling. Adjust the minimum and maximum number of replicas

Task. Switch the pipeline task your Endpoint uses

Revision. Choose a different version of your model repository
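The update rules above can be expressed as a small validation step that runs before any changes are sent. This is a sketch under our own assumptions: the function `build_update` and the field names are hypothetical, chosen to mirror keyword arguments that could then be passed to the `update` method of an endpoint object in `huggingface_hub`.

```python
from typing import Any, Dict

# Settings the article lists as changeable on an existing Endpoint.
UPDATABLE_FIELDS = {"instance_size", "min_replica", "max_replica", "task", "revision"}


def build_update(current_accelerator: str, changes: Dict[str, Any]) -> Dict[str, Any]:
    """Validate requested changes and return only the updatable settings."""
    requested = changes.get("accelerator", current_accelerator)
    if requested != current_accelerator:
        # Switching between CPU and GPU needs a new Endpoint.
        raise ValueError(
            f"cannot change instance type from {current_accelerator} to {requested}"
        )
    unknown = set(changes) - UPDATABLE_FIELDS - {"accelerator"}
    if unknown:
        raise ValueError(f"unsupported settings: {sorted(unknown)}")
    return {key: value for key, value in changes.items() if key in UPDATABLE_FIELDS}
```

For example, `build_update("cpu", {"instance_size": "x4", "max_replica": 2})` passes validation, while a request that includes `"accelerator": "gpu"` raises an error.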

Hugging Face Inference Endpoints Pricing

When you create an Endpoint, you choose an instance type on which to deploy and scale your model. Inference Endpoints require an active Hugging Face subscription and a linked credit card. Prices are quoted per hour, but you only pay for the compute resources used while your endpoints are running, and usage is billed by the minute.

Plans start at:

CPU Instances: $0.032/hour

GPU Instances: $0.50/hour

Accelerator Instances: $0.75/hour

This is how you get charged:

instance hourly rate * ((hours * replicas) + (scale-up hours * additional replicas))
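As a worked example, the formula can be written as a short function. The usage numbers are made up for illustration: one CPU replica at the $0.032/hour starting rate running for 24 hours, plus one additional replica for 2 hours of scale-up.

```python
def estimate_cost(hourly_rate: float, hours: float, replicas: int,
                  scale_up_hours: float = 0.0, additional_replicas: int = 0) -> float:
    """Apply the billing formula quoted above."""
    return hourly_rate * ((hours * replicas) + (scale_up_hours * additional_replicas))


# 0.032 * ((24 * 1) + (2 * 1)) = 0.032 * 26 = 0.832
cost = estimate_cost(0.032, hours=24, replicas=1,
                     scale_up_hours=2, additional_replicas=1)
print(f"Estimated cost: ${cost:.3f}")
```

With these inputs the estimate comes to about $0.83 for the day.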

Check the official Pricing page for the latest available instance types and detailed pricing.

Hugging Face Inference Endpoints – real-world success stories

The following real-world success stories show how different companies use Hugging Face Inference Endpoints and what they gain from them.

1. Phamily

Phamily, a healthcare technology company, used Hugging Face Inference Endpoints to build secure, HIPAA-compliant endpoints for text classification workloads using custom models based on MPNet and BERT.

The solution helped Phamily improve patient health outcomes through intelligent care management while significantly reducing operational overhead. It saved Phamily's software engineers about a week of development time per deployment, allowing the team to focus on research and development rather than infrastructure maintenance.

2. Pinecone

Pinecone, a leading vendor of vector database solutions, uses Hugging Face Inference Endpoints to power autoscaled endpoints for fast generation of embeddings. With some sentence transformers and embedding models, Pinecone serves over 100 requests per second with a few clicks.

Such scalability and ease of deployment have led Pinecone to set a new standard for building vector embedding-based solutions, such as semantic search and question answering models, without the heavy burden of infrastructure setup.

3. Capital Fund Management (CFM)

Capital Fund Management (CFM) is a quantitative investment firm that used Hugging Face Inference Endpoints to run open-source large language models (LLMs) for data analysis and for extracting information from structured data.

CFM processed large data sets efficiently by using the asynchronous client in the Hugging Face SDK to send many concurrent requests to its endpoints and receive structured output in real time. This helped the firm normalize and extract company names from financial reports, showing how well the endpoints handle high-throughput, data-intensive tasks.
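The concurrency pattern described here can be sketched with `asyncio`. This is not CFM's code: `fake_endpoint` is a hypothetical stand-in that simulates latency and "normalizes" a name locally, so the example runs without a deployed endpoint. In practice the call would go through an async client, such as `AsyncInferenceClient` from `huggingface_hub`.

```python
import asyncio
from typing import Awaitable, Callable, List


async def run_concurrently(texts: List[str],
                           call_endpoint: Callable[[str], Awaitable[str]],
                           max_concurrency: int = 8) -> List[str]:
    """Send many requests at once while capping how many are in flight."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def guarded(text: str) -> str:
        async with semaphore:
            return await call_endpoint(text)

    # gather returns results in the same order as the inputs.
    return await asyncio.gather(*(guarded(text) for text in texts))


async def fake_endpoint(text: str) -> str:
    """Hypothetical stand-in for a real endpoint call."""
    await asyncio.sleep(0.01)  # simulated network latency
    return text.strip().upper()


results = asyncio.run(run_concurrently([" acme corp ", "globex"], fake_endpoint))
```

The semaphore keeps the number of simultaneous requests below a chosen limit, which helps avoid overwhelming an endpoint that is still scaling up.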

The bottom line

Hugging Face Inference Endpoints are changing how businesses deploy and manage machine learning models at scale. Because the infrastructure is handled for them, machine learning teams and engineers can concentrate on optimizing, using, and managing their models. With deployment support for a wide range of tasks and strong safeguards such as encryption, privacy controls, and compliance certifications, Hugging Face makes operationalizing AI easier than ever.

Whether you work with NLP, computer vision, or custom AI solutions, Inference Endpoints help businesses cut costs and streamline the entire deployment process while keeping data confidential. As AI integrations become a core part of business operations, Hugging Face is a strong choice for organizations focused on compliance and security.

At DigitalSuits, we specialize in integrating AI into projects effortlessly and efficiently. Get in touch with us to see how we can assist you in successfully bringing AI into your business.

Frequently asked questions

Is my data secure when using Inference Endpoints?

Yes, all data transmitted to and from the endpoint is encrypted in transit using TLS/SSL, ensuring data privacy and security.

How can I monitor and manage my deployed Inference Endpoints?

You can monitor your endpoints through the Hugging Face web application, which provides access to logs and a metrics dashboard, or manage them programmatically using the huggingface_hub Python library.

Which machine learning tasks and models are supported?

Inference Endpoints support all Transformers, Sentence-Transformers, and Diffusers models, as well as custom tasks using user-defined inference handlers or custom containers.
